Keyword Decisions In Sponsored Search Advertising

Keyword Decisions in Sponsored Search Advertising: A Literature Review

Abstract. In sponsored search advertising (SSA), keywords serve as the basic unit of business model, linking three stakeholders: consumers, advertisers and search engines. This paper presents an overarching framework for keyword decisions that highlights the touchpoints in search advertising management, including four levels of keyword decisions, i.e., domain-specific keyword pool generation, keyword targeting, keyword assignment and grouping, and keyword adjustment. Using this framework, we review the state-of-the-art research literature on keyword decisions with respect to techniques, input features and evaluation metrics. Finally, we discuss evolving issues and identify potential gaps that exist in the literature and outline novel research perspectives for future exploration.

Keyword: keyword decisions, sponsored search advertising, keyword generation, keyword targeting,

1. Introduction

Section titled “1. Introduction”Sponsored search advertising (SSA) has become one of the most successful business models of online advertising. Millions of advertisers spent a large amount of advertising budgets in SSA to promote their products and services (Yang et al., 2018). According to a recent IAB report (Interactive Advertising Bureau, 2022), in the United States alone, the annual internet advertising revenue of 2021 reached $189.3 billion where SSA accounts for around 41.4% of that pie.

In SSA, firms need to choose suitable keywords to describe their products or services efficiently, and organize these keywords following certain advertising structures (e.g., account, ad-campaign, and ad-group) defined by major search engines. Once a user submits a query to a search engine which is related to one or several of these keywords, it triggers an auction process that determines which advertisements and their rankings to be displayed on search engine result pages (SERPs), together with a set of organic search results. In the SSA ecosystem, keywords are the unique carriers connecting advertisers, potential consumers, and search engines. Moreover, keywords are the basic units for advertisers to conduct online market research, design and evaluate marketing strategies. Keywords play a crucially important role in business competition for companies in online platforms. In practice, advertisers have to make various keyword decisions throughout the entire lifecycle of SSA campaigns (Yang et al., 2019). Therefore, it becomes a critical issue for search advertisers to make a series of effective keyword decisions in SSA.

Since the advent of SSA, keyword decisions have increasingly attracted research interests from both academia and industries. As far as we knew, on one hand, it is apparent that there are no commonly agreed definitions for related concepts identified in the extant literature on keyword decisions; on the other hand, prior research on keyword decisions has been conducted either separately on an individual keyword decision or without consideration of search advertising structures. There is a need for developing an integrated review of the state-of-the-art knowledge about keyword decisions in SSA. Our objectives for this paper are to examine what has been done in the literature on keyword decisions, uncover the potential gaps, and figure out novel research perspectives for future exploration.

This review complements recent review articles on online advertising and advertising selection. Ha (2008) conducted a review of online advertising published in major advertising journals from 1996 to 2007, which focuses on analyzing conceptual foundations, theories, and state-of-the-art practices of online advertising. Shatnawi & Mohamed (2012) presented an overview of online advertising selection, which focuses on investigating existing approaches, comparing and classifying these approaches. Our review focuses on keyword decisions in SSA from the system perspective, by taking into account search advertising structures and the entire lifecycle of advertising campaigns.

The contribution of our review can be summarized in the following ways. First, to the best of our knowledge, this review is the first effort focusing on keyword decisions in SSA, which has not been systematically explored before. We present an overarching framework for keyword decisions based on practical decision scenarios throughout the entire lifecycle of SSA campaigns, and conduct a systematic review of the state-of-the-art literature with respect to techniques, input features and evaluation metrics. Second, we find that a lot of practical issues remain unaddressed in this field, although plenty of research efforts have been involved in the last two decades. In particular, few research efforts reported on practical keyword decisions such as keyword targeting, keyword assignment and grouping, and keyword adjustment. In addition, our review will provide foundations for continuing studies on keyword decisions in SSA and other advertising forms.

2. Survey Scope and Structure

Section titled “2. Survey Scope and Structure”2.1 Survey Scope

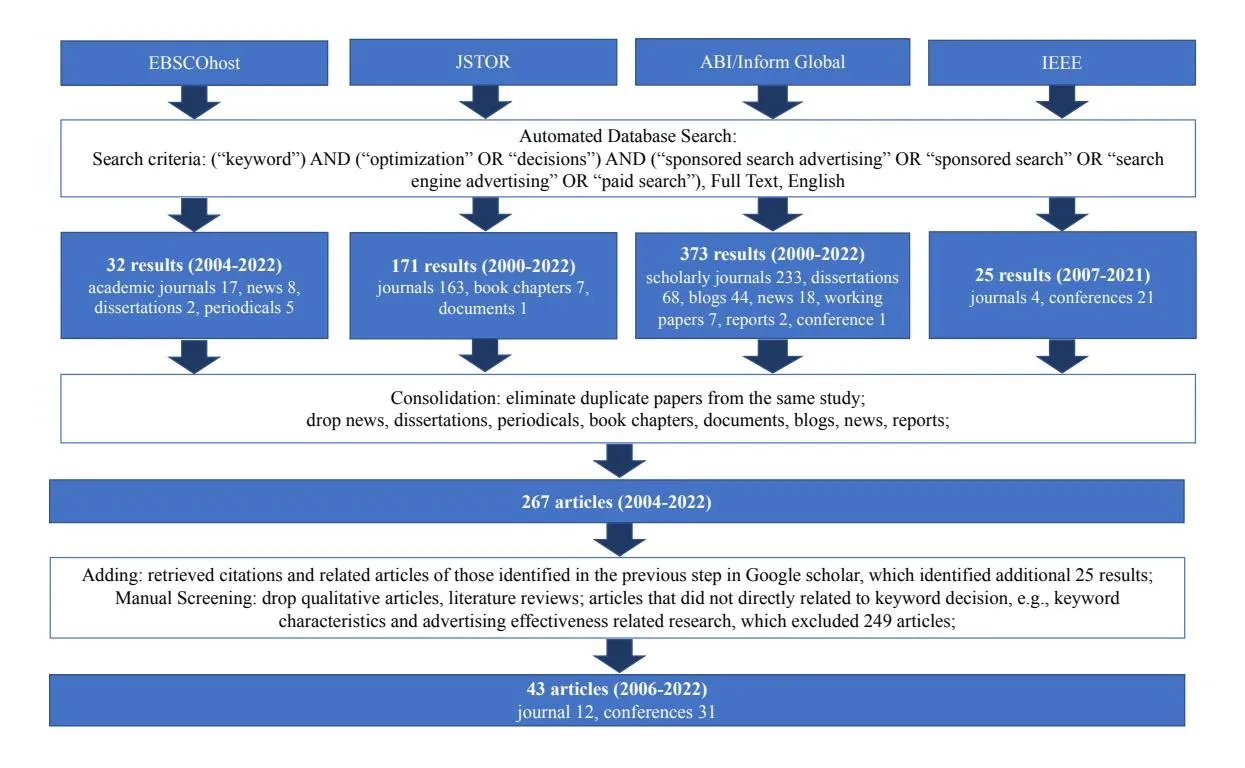

Section titled “2.1 Survey Scope” Figure 1. Study Search and Selection

Figure 1. Study Search and Selection

Due to the interdisciplinary nature of keyword decisions topics, research articles covered in this review were published in major IT-oriented (e.g., computer science, artificial intelligence and information retrieval) and/or business-oriented (e.g., management information systems, advertising and marketing) journals and conferences. Our review includes articles retrieved mainly from four academic databases: EBSCOhost, JSTOR, ABI-Inform and IEEE using full text search of (“keyword”) AND (“optimization” OR “decisions”) AND (“sponsored search advertising” OR “sponsored search” OR “search engine advertising” OR “paid search”). We restricted our selected studies in English. The search resulted in 32 results from EBSCOhost, 171 results from JSTOR, 373 results from ABI/Inform Global and 25 results from IEEE. We manually screened the results to eliminate duplicate articles, news, dissertations, periodicals, book chapters, documents, blogs, news and reports. This process led to 267 articles. Moreover, we expanded the literature by retrieving citations of the articles obtained in the previous step in Google scholar, which yielded additional 25 results. Furthermore, we dropped qualitative articles, literature reviews and empirical articles on keyword research, by going through title, abstract, full-text of each article, which excluded 249 articles. Finally, this literature search resulted in a selection of 43 research publications, including 12 peer-reviewed journal articles and 31 conference articles, covering the period from 2006 to 2022. Our search process is illustrated in Fig. 1.

2.2 Survey Structure

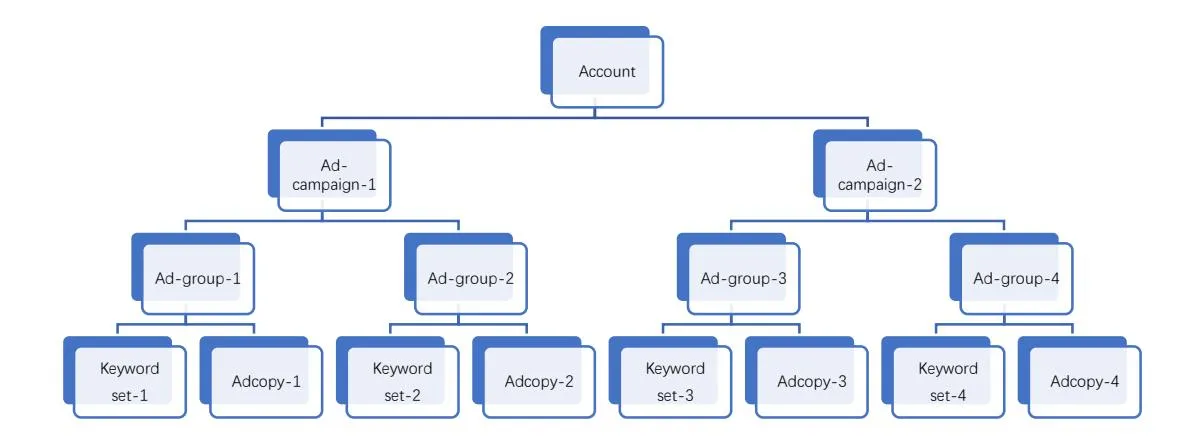

Section titled “2.2 Survey Structure”In SSA, a general advertising structure employed by major search engines (e.g., Google, Bing) can be described as: under a SSA account of an advertiser, there are one or several campaigns that are run simultaneously in order to fulfill a promotional goal; in the meanwhile, one or several ad-groups comprise a campaign, and each ad-group consists of one or more ad-copies and a set of keywords. Fig. 2 presents an illustration of the search advertising structure. In this sense, SSA is essentially distinct from traditional advertising (e.g., print ads and TV ads) due to its hierarchical advertising structure. Thus, advertising decisions in SSA are essentially structured, rather than flatted as in traditional advertising (Yang et al., 2012, 2019).

Figure 2. An Illustration of Sponsored Search Advertising Structure

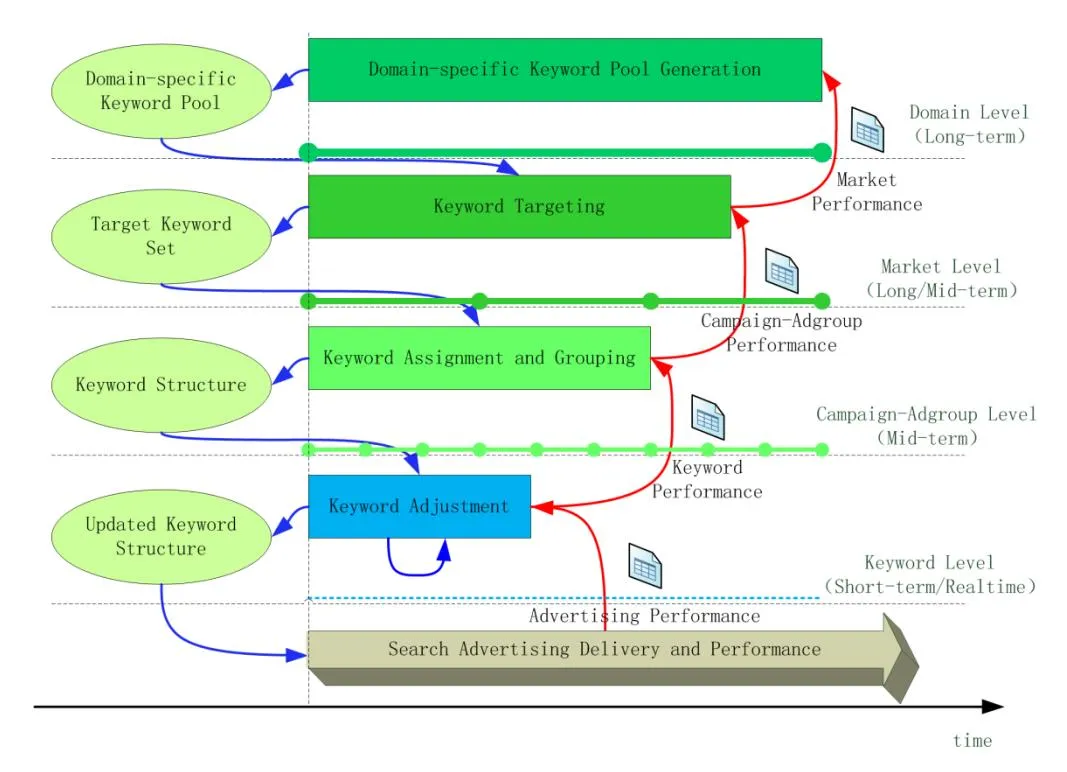

Throughout the entire lifecycle of SSA campaigns, advertisers have to make a series of keyword related decisions at different levels, namely keyword generation at the domain level, keyword targeting at the market level, keyword assignment and grouping at the campaign and ad-group level, and dynamical keyword adjustment, forming a closed-loop decision cycle (Yang et al., 2019). In thisreview, we organize the extant literature on keyword decisions within a research framework, as presented in Fig. 3.

Figure 3. The Framework of Our Literature Review on Keyword Decisions

Section 3 centers on keyword generation, which is also known as the domain-level keyword optimization, aiming to generate a domain-specific keyword pool. In this section, after defining the keyword generation problem, we go over techniques, features and evaluation metrics used in the literature on keyword generation.

Section 4 discusses keyword targeting, which is also known as the market-level keyword optimization, aiming to obtain a more targeted set of keywords from the domain-specific keyword pool and choose appropriate match types for selected keywords in order to reach the right population of potential consumers. In this section, we first define the keyword targeting problem, and then review techniques, features and evaluation metrics for keyword selection and keyword match.

Section 5 focuses on keyword assignment and grouping, which is also known as the campaign and ad-group level keyword optimization, aiming to yield an effective keyword structure by following the advertising structure of SSA. In this section, we first define the problem of keyword assignment and grouping, and then introduce techniques, features and evaluation metrics used in this area.

Section 6 concerns keyword adjustment, i.e., advertisers have to dynamically adjust their keyword structures and associated strategies according to the realtime advertising performance of SSA campaigns. In this section, we first define the keyword adjustment problem in more detail, and then we discuss the status of this area.

Section 7 summarizes the state-of-the-art keyword decisions, uncovers the gaps that exist in the literature, and suggests promising research perspectives for future exploration. Finally, we conclude our review in Section 8.

Notations used in problem definitions for keyword decisions are presented in Table 1.

Table 1. Notations in problem definitions for keyword decisions

| Terms | Definition |

|---|---|

| (𝐺𝑁𝑇) 𝑓 | The keyword generation function |

| (𝐺𝑁𝑇) 𝑆 | Information source for keyword generation |

| (𝐺𝑁𝑇) 𝐾 | A set of generated keywords |

| 𝑘𝑖 | The 𝑖-th keyword in a set |

| (𝐺𝑁𝑇) 𝑛 | The number of generated keywords |

| (𝑇𝐺𝑇) 𝑓 | The keyword targeting function |

| (𝑇𝐺𝑇) 𝑛 | The number of targeting keywords |

| (𝑇𝐺𝑇) 𝐾 | A set of selected keywords |

| (𝑇𝐺𝑇) 𝑥𝑖,𝑚̅ | A binary decision variable of keyword targeting, indicating whether the 𝑖-th |

| keyword in match type 𝑚̅ is selected | |

| 𝑚̅ | Keyword match type |

| (𝐴𝑆𝑀) 𝑓 | The keyword assignment function |

| (𝐴𝑆𝑀) 𝐾𝑗 | A set of keywords assigned to the 𝑗-th campaign |

| A binary decision variable of keyword assignment, indicating whether the 𝑖-th | |||

|---|---|---|---|

| (𝐴𝑆𝑀) 𝑥𝑖,𝑗 | keyword is assigned to the 𝑗-th campaign | ||

| 𝑛𝑐𝑎𝑚𝑝𝑎𝑖𝑔𝑛 | The number of ad campaigns | ||

| (𝐺𝑅𝑃) 𝑓 | The keyword grouping function | ||

| (𝐺𝑅𝑃) 𝐾𝑗,𝑙 | A set of keywords for the 𝑙-th ad-group of the 𝑗-th campaign | ||

| (𝐺𝑅𝑃) | A binary decision variable of keyword grouping, indicating whether the 𝑖-th keyword | ||

| 𝑥𝑖,𝑗,𝑙 | is grouped into the 𝑙-th ad-group of the 𝑗-th campaign | ||

| 𝑛𝑔𝑟𝑜𝑢𝑝 | The number of ad-groups | ||

| (𝐴𝐷𝐽) 𝑓 | The keyword adjustment function | ||

| (𝐴𝐷𝐽) | A binary decision vector of keyword adjustment, indicating whether the 𝑖-th keyword | ||

| 𝑥𝑖,𝑗,𝑙,𝑡 | is grouped into the 𝑙-th ad-group of the 𝑗-th campaign at time 𝑡 | ||

| (𝐴𝐷𝐽) 𝐾𝑗,𝑙,𝑡 | A set of keywords grouped into the 𝑙-th ad-group of the 𝑗-th campaign at time 𝑡 |

3. Domain-Specific Keyword Pool Generation

Section titled “3. Domain-Specific Keyword Pool Generation”3.1 Problem Description

Section titled “3.1 Problem Description”For advertisers in an application domain, they need to build a pool of relevant keywords over which they can conduct marketing research and from which a more accurate set of keywords can be determined for their search advertising campaigns. This step is called keyword generation, and its output is the domain-specific keyword pool.

Formally, the keyword generation problem can be defined as follows. Let () denote information sources for keyword generation such as a corpus of Web pages, query logs, search results, advertising database, domain-specific semantics and concept hierarchy, the keyword generation process can be given as

(1)

where () denotes the keyword generation function, () denotes a set of generated keywords and denotes the -th keyword in the set.

Generally, keyword pool generation aims to obtain a set of keywords that represents the domainspecific knowledge and information of the targeted market as comprehensive and relevant as possible (Nie et al., 2019), starting with websites (or Web pages) or a set of seed keywords provided by advertisers. In this sense, keyword generation is also known as keyword expansion in that it expands from one or several seed keywords. The generated set of keywords can be viewed as an extended business description for advertisers.

A plethora of academic efforts have been devoted to keyword generation, in order to help advertisers reach potential consumers (e.g., Yih et al., 2006; Thomaidou and Vazirgiannis, 2011; Nie et al., 2019; Scholz et al., 2019; Yang et al., 2019). Note that the keyword generation problem of SSA is essentially similar to that of online contextual advertising in that both are intended to obtain a set of keywords of interest to consumers. Thereby, prior research in this direction did not make a distinction between them. Hence, we follow such a convention in this paper by reviewing keyword generation for the two advertising contexts within a same framework. A related research stream to keyword generation is query expansion in the field of information retrieval. For an extensive review on query expansion, refer to see Carpineto & Romano (2012) and Azad & Deepak (2019).

In the following, we present major techniques, and then discuss input features and evaluation metrics used in the literature.

3.2 Techniques for Keyword Generation

Section titled “3.2 Techniques for Keyword Generation”State-of-the-art keyword generation techniques reported in the SSA literature can be categorized into five groups: (1) co-occurrence statistics, (2) similarity measures, (3) multivariate models, (4) linguistics processing models, and (5) machine learning models. Table 2 presents categories of state-of-the-art keyword generation techniques and the distribution of selected studies in this section. In the following, we will go over the specific keyword generation techniques in each category, and analyze their advantages and disadvantages.

Table 2. Techniques for Keyword Generation

| Category | Approach | Reference | Sources |

|---|---|---|---|

| Co-occurrence | / | Zhou et al. (2007) | Websites and Web pages |

| statistics | |||

| Similarity | The Jaccard similarity | Joshi & Motwani (2006) | Search result snippets |

| measures | Mirizzi et al. (2010) | Semantics and concept hierarchy, | |

| search result snippets | |||

| Chen et al. (2008) | Semantics and concept hierarchy | ||

| The cosine similarity | Abhishek & Hosanagar | Websites and Web pages, search | |

| (2007) | result snippets | ||

| Chang et al. (2009); | Advertising databases | ||

| Sarmento et al. (2009) | |||

| Thomaidou & Vazirgiannis | Search result snippets, websites | ||

| (2011) | and Web pages |

| Semantic similarity between terms | Amiri et al. (2008) | Semantics and concept hierarchy, search result snippets, query logs | |

|---|---|---|---|

| Multivariate models | Logistic regression | Yih et al. (2006); Wu &Bolivar (2008); Lee et al. (2009) | Websites and Web pages |

| Berlt et al. (2011) | Advertising databases | ||

| Bartz et al. (2006) | Query logs, advertising databases | ||

| Collaborative filtering | Bartz et al. (2006) | Query logs, advertising databases | |

| Topic-sensitive PageRank | Zhang et al. (2012a) | Semantics and concept hierarchy, websites and Web pages | |

| Latent Dirichlet | Qiao et al. (2017) | Query logs | |

| allocation | Welch et al. (2010) | Semantics and concept hierarchy, search result snippets | |

| Hierarchical Bayesian | Nie et al. (2019) | Semantics and concept hierarchy | |

| Linguistics processing | Translation model | Ravi et al. (2010) | Advertising databases, websites and Web pages, query logs |

| Word sense | Scaiano &Inkpen (2011) | Semantics and concept hierarchy | |

| disambiguation | |||

| The relevance-based language model | Jadidinejad &Mahmoudi (2014) | Semantics and concept hierarchy | |

| Heuristics-based method | Scholz et al. (2019) | Query logs | |

| Machine | Random walk | Fuxman et al. (2008) | Query logs |

| learning | Decision tree | GM et al. (2011) | Websites and Web pages |

| Sequential pattern mining | Li et al. (2007) | Websites and Web pages | |

| Active learning | Wu et al. (2009) | Search result snippets | |

| Bayesian online learning | Schwaighofer et al. (2009) | Advertising databases | |

| Multi-step semantic | Zhang &Qiao (2018); | Semantics and concept hierarchy, | |

| transfer analysis | Zhang et al. (2021) | query logs | |

| Sequence-to-sequence learning | Zhou et al. (2019) | Query logs |

3.2.1 Co-occurrence Statistics

Section titled “3.2.1 Co-occurrence Statistics”Co-occurrence statistics count paired terms within a collection such as Web pages, taking Web advertising as an information retrieval problem. Notations used in co-occurrence statistics for keyword generation are presented in Table 3a.

Table 3a. Notations used in co-occurrence statistics for keyword generation

| Terms | Definition |

|---|---|

| 𝐾, 𝐾′ | A finite set of keywords/terms |

| ′ 𝑘, 𝑘 | A keyword/term |

| 𝑓𝑟𝑒𝑞(𝑘𝑖 , 𝑘𝑗) | The co-occurrence frequency of 𝑘𝑖 and 𝑘𝑗 |

| 𝐾(𝑡𝑓) | The top frequent keywords/terms |

| The current keyword/term | |

|---|---|

| ratio(k) | The ratio of the sum of the total number of terms in sentences including to the |

| total number of terms in the document | |

| The total number of keywords/terms in sentences including |

In keyword generation, the word co-occurrence matrix is constructed to get weighted keywords. The general setting of co-occurrence statistics is as follows. Given two finite sets of keywords K and K’, , , let denote the Cartesian product of K and K’, the co-occurrence statistics of a keyword pair is given by , which measures the frequency of co-occurrence of and .

Zhou et al. (2007) proposed a keyword extraction model where Web pages and advertisements are represented in a same data structure to support a retrieval process. In their model, nouns and verbs are extracted from the text of Web pages and the co-occurrence frequency between each term and top frequent terms is counted. Specifically, the term weight is calculated as

where K(tf) is the top frequent terms; k and are a term in K(tf) and the current term, respectively; is the co-occurrence frequency of k and ; is the total number of terms in sentences including ; ratio(k) is the ratio of the sum of the total number of terms in sentences including k to the total number of terms in the document.

A higher indicates that the current term (i.e., ) is more important in representing the document. Generally, co-occurrence statistics is used as a baseline in the research on keyword generation.

3.2.2 Similarity Measures

Section titled “3.2.2 Similarity Measures”In cases where two relevant keywords do not occur together, co-occurrence statistics may fail to generate right keywords. Moreover, a keyword may have more than one meaning (Chen et al., 2008), which makes it difficult to filter out generated keywords. Thus, similarity measures are developed to explore the characteristic of keywords. Notations used in similarity measures for keyword generation are presented in Table 3b.

Table 3b. Notations used in similarity measures for keyword generation

| Terms | Definition |

|---|---|

| A characteristic keyword vector | |

| W | A feature weighting function |

| The similarity between and | |

|---|---|

| doc | A document |

| DOC | A set of documents |

| quality(k’,k) | The quality of suggesting keyword for query |

| The TFIDF keyword vector for | |

| TF | The term frequency |

| IDF | The inverse document frequency |

| PMI(k) | A point-wise mutual information feature vector for keyword k |

| The pointwise mutual information between and a given keyword |

Specifically, each candidate keyword is represented as a characteristic keyword vector . Similarity between each pair of keywords is given as

(3)

where is a similarity measure, and one of the most popular possible instantiations of is the cosine; W is the feature weighting function. Term frequency-inverse document frequency (TFIDF) is commonly used as a weighting statistic, which is calculated as , where term frequency (TF) is the relative frequency of keyword k within document doc, i.e., , and the inverse document frequency (IDF) is the logarithmically scaled inverse fraction of DOC that contains k, i.e., .

A similarity graph can be constructed on the basis of the similarity between each pair of keywords, where nodes are keywords and the edges indicate similarities between keywords. Through traversing the similarity graph, a set of relevant but cheaper keywords can be generated (Joshi & Motwani, 2006; Abhishek & Hosanagar, 2007; Amiri et al., 2008; Thomaidou & Vazirgiannis, 2011).

In similarity-based keyword generation, one of common information sources is search result snippets. Given starting seeds, each keyword k is submitted as a query to a search engine to retrieve a set of characteristic documents , which is used to create a context vector for the input keyword and extract relevant keywords. For example, Joshi & Motwani (2006) characterized each input keyword using its text-snippets (i.e., words before and after the input keyword) document from the top 50 search-hits. The relevance of keyword k’ to k is measured by the frequency of k’ observed in the characteristic document of keyword k; then the directed relevance between keywords was used to construct a directed graph (i.e., TermsNet). TermsNet suggests keywords through ranking their qualities. Specifically, the quality of k’ for k is defined as quality(k’, k) =

, where each i is an outneighbor of k’, which helps identify relevant yet nonobvious terms and their semantic associations. Abhishek & Hosanagar (2007) built a mathematical formulation which measures semantic similarity between keywords using a Web-based kernel function. They scraped advertisers’ webpages, extracted and added keywords with high TFIDF into the initial dictionary, then created the context vector for each keyword. Given the TFIDF keyword vector for document , the context vector is the normalized centroid of . The semantic similarity kernel function is defined as the inner product of context vectors for two keywords. Through extracting keywords from the landing page as initial seeds, Thomaidou & Vazirgiannis (2011) entered the extracted seeds into a search engine and parsed the snippets and titles of search results to construct a characteristic vector for these keywords. Most occurrences inside the resulted documents are kept as the most relevant keywords for the seed query. The proposed method can generate keywords that do not explicitly show on the landing page.

Similarity-based methods by exploring sources such as search result snippets can help increase the precision of keyword generation and catch the trend of consumer behaviors. However, when constructing the characteristic vectors of keywords, most similarity-based methods with search result snippets favor the keywords with high co-occurrence frequency. This might lead an expensive cost for the generated keywords, because keywords with high co-occurrence frequency are typically popular. Moreover, keywords generated by exploring the top-hit search result snippets are expensive as well (Thomaidou & Vazirgiannis, 2011). Thus, similarity-based keyword generation with search result snippets may place advertisers into a highly competitive environment, thus can’t guarantee profit maximization.

To address this issue, researchers have explored semantic relationships between keywords with user-generated contents such as Wikipedia, DBpedia and manually-defined Web directories as valuable sources to generate non-obvious keywords (Amiri et al., 2008; Chen et al., 2008; Mirizzi et al., 2010). Wikipedia contains a large amount of clean information and conceptual knowledge on a wide spectrum of topics that can be utilized to suggest excessive long-tail keywords. Amiri et al. (2008) considered each query as an initial concept and tried to find related concepts from the Wikipedia collection. For each query keyword q, they grouped the retrieved documents by expectation maximization (EM) clustering algorithm and constructed a representative vector (i.e., keywords vector) for each cluster on

the basis of the TFIDF scheme. The representative vector contains related keywords/concepts and the relationship weights between these keywords and . Based on these vectors, a contextual graph was created to suggest a set of keywords/concepts that are more similar to the query keywords. DBpedia dataset is a community effort on the basis of Wikipedia to extract and store structured information in an RDF dataset that supports sophisticated queries. Mirizzi et al. (2010) presented a system to generate semantic tags through exploiting semantic relations from the DBpedia dataset. The similarity between each pair of resources in the DBpedia graph is calculated by querying external information sources (e.g., search engines and social bookmarking systems) and exploiting textual and link analysis in DBpedia. They used a hybrid ranking algorithm to rank keywords and expand queries formulated by users, and proved the validity of their algorithm by comparing with other RDF similarity measures (i.e., Algo2, Algo3, Algo4 and Algo5). By exploiting the semantic knowledge in the concept hierarchy built based on a manually-defined Web directory, Chen et al. (2008) proposed a keyword generation method. In more detail, it first matched a given seed keyword with one or several relevant concepts, and then these concepts were used together with the concept hierarchy to enrich the meaning of the seed keyword, finally a set of keywords was suggested by taking advantage of the conceptual information based on the similarity function of a variant of the Jaccard coefficient. However, similarity-based methods with semantic relationships suffer from the low concept coverage, and potentially lead to a decrease in the conversion rate, because low-cost keywords may be too far away from the initial seeds and thus fail to attract the target users.

Other sources such as co-bidding information and ads database have also been exploited in similarity-based keyword generation (Chang et al., 2009; Sarmento et al., 2009). Assuming that a set of bid keywords under the same ad is associated with a similar hidden intent, Chang et al. (2009) constructed a point-wise mutual information feature vector for each keyword () = (1 , 2 , … , ), where is the pointwise mutual information between keyword and co-bidded keyword . Then given an ad consisting of keywords, they ranked the suggested keywords in the ad network through summing the cosine similarity between the PMI feature vectors, i.e., (, ) = ((), ()) for = [1, … , ]. Similarly, assuming that advertisers associate inter-changeable keywords into the same ad, Sarmento et al. (2009) constructed a keyword synonymy graph by computing pairwise similarity between all co-occurrence vectors, and suggested relevant and non-obvious keywords by ranking candidate keywords through a function considering both the overlap and the average similarity. Through performing online comparisons of the proposed method with another keyword generation method used in the largest Portuguese Web advertising broker, they showed that advertisements containing keywords generated by the proposed method often have a superior performance in terms of click-through rate, which in turn resulted in a potential revenue increase.

3.2.3 Multivariate Models

Section titled “3.2.3 Multivariate Models”Keyword generation methods based on similarity measures are heavily confined to the local space defined by seed keywords in that they rely on statistical or semantic relationships between keywords. Moreover, in many cases, keywords generated by similarity-based methods might fail to capture search users’ real intents that are the most important to advertisers. To address these issues, multivariate models have been used to use rich latent information to facilitate keyword generation. Multivariate models can help advertisers generate a long tail of candidate keywords that are relevant and occupy a large fraction of the total traffic, by exploring potential factors and hidden topics affecting the performance of candidate keywords. Notations used in multivariate models for keyword generation are presented in Table 3c.

Table 3c. Notations used in multivariate models for keyword generation

| Terms | Definition |

|---|---|

| 𝑦 | A binary variable indicating whether a candidate keyword is relevant to a given |

| seed keyword | |

| 𝒙 | A vector of input features (𝑥̅) associated with a candidate keyword |

| 𝑤̅ | A weight learned for an input feature in 𝒙 |

| 𝑠𝑖𝑡𝑢𝑎𝑡𝑖𝑜𝑛 | Situation features specific to a targeted scene |

| 𝑐𝑜𝑢𝑛𝑡(𝑘, 𝑠𝑖𝑡𝑢𝑎𝑡𝑖𝑜𝑛) | The number of times that 𝑘 occurs in 𝑠𝑖𝑡𝑢𝑎𝑡𝑖𝑜𝑛 |

| 𝑐𝑜𝑢𝑛𝑡(𝑘) | The collection frequency of 𝑘 |

| 𝑐𝑜𝑢𝑛𝑡(𝑠𝑖𝑡𝑢𝑎𝑡𝑖𝑜𝑛) | The number of keywords in scenes mapped to 𝑠𝑖𝑡𝑢𝑎𝑡𝑖𝑜𝑛 |

| 𝑈 | A set of items (e.g., URLs) |

| 𝑹 𝐾 × 𝑈 | A keyword-item rating matrix |

| 𝑹𝑢 | The 𝑢-th column of 𝑹 𝐾 × 𝑈 |

| 𝑅𝑘,𝑢 | The rate of 𝑘 on item 𝑢 |

| 𝑸 | A binary column vector with 𝐾 -dimensions where it is equal to 1 if the keyword |

| on the 𝑘-th position is the seed keyword | |

| 𝒔𝒊𝒎 | A similarity vector |

| 𝝅𝑚 | A vector of indexed Web pages in the 𝑚-th iteration |

| (𝑚) 𝜋𝑖 | A score of the 𝑖-th Web page |

| 𝐺 | 1 A row-normalized adjacency matrix of the link graph, and 𝐺𝑗,𝑖 = 𝑜𝑖 |

| 𝑜𝑖 | The out-degree of 𝑖-th Web page |

|---|---|

| 𝚲 | A damping vector biased to a certain topic |

| 𝑪 | A content damping vector |

| 𝜗1 , 𝜗2 | Parameters controlling the impact of the content relevant score and the |

| advertisement relevant score | |

| 𝑔 | A row-normalized Wikipedia graph link 𝑛 × 𝑛 matrix |

| 𝑡𝑜𝑝𝑖𝑐 | A topic |

| 𝑛𝑡𝑜𝑝𝑖𝑐 | The number of topics |

| 𝑛𝑑 | The number of documents |

| (𝑡𝑜𝑝𝑖𝑐) 𝜑 | The topic distribution for document |

| (𝑘) 𝜑 | The keyword distribution for topic |

| (𝑡𝑜𝑝𝑖𝑐) (𝑘) 𝛽 , (𝛽 ) | Hyper parameters of Dirichlet distributions |

| 𝑘0 | A seed keyword |

| 𝑘0 . 𝐶𝑎𝑛𝑑 | The set of candidate keywords for 𝑘0 |

| 𝐼 | A set of keywords consisting of 𝑘0 and 𝑘0 . 𝐶𝑎𝑛𝑑 |

| 𝑘𝑖 . 𝐴𝐾 | A list of associative keywords of 𝑘𝑖 |

| 𝑘𝑖 . 𝑃𝑟𝑜𝑓𝑖𝑙𝑒 | A corresponding characteristic profile of 𝑘𝑖 |

| 𝑘. 𝑣𝑜𝑙 | The search volume of 𝑘 |

| 𝐶𝑜𝑟𝑝𝑢𝑠 | All characteristic profiles of keywords in 𝐼 |

| 𝜂𝑗 | The total times that the title keyword doesn’t appear in the 𝑗-th component of a |

| Wikipedia article | |

| 𝛼𝑗 | The importance of a component where a given keyword appears |

| 𝜃𝑗 | A random variable obeying an Beta distribution, denoting the unimportance of a |

| component, i.e., 𝜃𝑗 = 1 − 𝛼𝑗 | |

| ′ ′ 𝛼𝑗 , 𝛽𝑗 | The shape parameters for the Beta distribution of 𝜃𝑗 |

| 𝐾𝑊𝑊(𝑘) | The weight for a given keyword |

| (𝑘) 𝑇𝐹𝐼𝐷𝐹𝑠 | The importance of a keyword presented in the abstract |

| (𝑘) 𝑇𝐹𝐼𝐷𝐹𝑐 | The importance of a keyword presented in the content |

| (𝑘) 𝑇𝐹𝐼𝐷𝐹𝑑 | The importance of a keyword presented in the main text |

| 𝑇𝐹𝐼𝐷𝐹𝑖 (𝑘) | The importance of a keyword presented in the information box |

| 𝐴𝑇(𝑘) | A variable indicating whether a keyword is in the anchor text |

In the literature on keyword generation, five multivariate models, namely logistic regression, collaborative filtering, topic-sensitive PageRank, latent Dirichlet allocation and hierarchical Bayesian have been employed to explore effects of various keyword characteristics in the generation process.

(1) Logistic regression (LR). LR is a basic learning technique which treats keyword generation as a binary classification problem. LR learns a vector of weights for input features and returns the estimated probability of whether a candidate phrase is relevant (Yih et al., 2006; Wu & Bolivar, 2008; Berlt et al., 2011; Lee et al., 2009). Typically, LR is used in keyword generation in the following form:

(4)

where y is a binary variable, whose value is equal to 1 if the candidate keyword is relevant, otherwise 0; x is a vector of input features associated with a candidate keyword; and is a weight that the LR model learns for an input feature in x. The generated keywords are ranked by the estimated probability.

LR was used in finding advertising keywords from Web pages by Yih et al. (2006). Specifically, it takes y as a binary variable under the monolithic selector; while under the decomposed selector, a phrase is decomposed into individual words and each word is tagged with one of the five labels (i.e., beginning, inside, last, unique and outside), and then five estimated possibilities are returned, i.e., . The probability of a phrase is calculated by multiplying the five probabilities of its individual words being the correct label of the sequence. Experiments illustrated that LR significantly outperforms baseline methods (e.g., the TFIDF model, an extended TFIDF model with learned weights and a domain-specific keyword extraction method).

Subsequent studies used LR for different advertising scenarios. Following Yih et al. (2006), Wu & Bolivar (2008) added HTML features and proprietary data features (e.g., leaf and root category entropy) for the particular website (i.e., eBay) in the LR model, and constructed a candidate category vector for each keyword to resolve the keyword ambiguity problem. In order to find relevant keywords from online video contents, Lee et al. (2009) took into account not only within-document term features (e.g., TF-IDF scores), but also situation features specific to a targeted scene to train a LR model, i.e., pointwise mutual information (PMI) based on the co-occurrence information between a term k and situation situation, , where count(k, situation) is the number of times that k occurs in situation situation, count(k)and count(situation) are the collection frequency of k and the number of words in scenes mapped to situation, respectively. Experiments showed that the scene-specific features are potentially useful to improve the performance of keyword extraction in video advertising. Instead of directly asking humans to evaluate the relevance of candidate keywords, Berlt et al. (2011) reduced the training cost of the LR-based keyword generation model by taking experts’ evaluation on the relevance of advertisements for the page where the keyword candidate is extracted from. Experiments showed that their ad-collection-aware approach could yield significant gains without dropping precision values. However, the LR-based methods can only generate keywords from particular pages, while missing

information from the similar pages. Besides websites and Web pages, advertisement databases and search click logs have been used by Bartz et al. (2006) to examine the performance of logistic regression, and results showed that biddedness data in advertisement databases can provide better precision.

(2) Collaborative filtering (CF). CF is a classic recommendation algorithm. Suppose that there are a keyword set K and an item (e.g., URL) set U. Following Bartz et al. (2006), the keyword-item rating matrix in keyword generation is given as

(5)

where denotes the rate of keyword k on item u. Each keyword can be represented as a |U|-dimensional vector and the similarity between two rating vectors can be measured with the cosine similarity

(6)

Then the keyword-item rating matrix could be converted to a keyword-term similarity matrix based on the cosine similarity, and the Top-k most similar keywords are ranked for keyword generation. Specifically, keywords and URLs in the search logs are extracted to construct a term-URL rating matrix whose rate is the number of times that a user searched for that keyword and clicked on that URL; then a column vector with |K|-dimensions was created, whose value is 0-1 binary, where it is equal to 1 if the keyword in the k-th position is the seed keyword. The similarity vector was calculated as , where is the u-th column of matrix , and is an 0-1 binary indicator vector with |K|-length and contains 1 for every non-zero entry of . Finally, the keywords were ranked in descending order of indexes in .

Based on advertisement databases and search click logs, Bartz et al. (2006) examined the performance of a CF model with respect to generating relevant keywords, starting from a set of seed keywords describing an advertiser’s products or services, and found that the standard collaborative filtering framework has statistically equal performance with the logistic regression with a set of selected features.

(3) Topic-sensitive PageRank (TSPR). Topic-sensitive PageRank extends PageRank by

allowing the iteration process to be biased to a specific topic. The main idea of the PageRank algorithm is to propagate the quality score of a Web page to its out-links and obtain the static quality of Web pages by performing a random walk on the link graph. Following Haveliwala (2003), the topic-sensitive PageRank algorithm is defined as

\boldsymbol{\pi}_{m+1} = (1 - \vartheta)\boldsymbol{\Lambda} + \vartheta G \boldsymbol{\pi}_m, \tag{7}

where = [1 () , … , () is a vector of the indexed Web pages in the -th iteration, () is the score of the -th Web page; the matrix is a row-normalized adjacency matrix of the link graph, and , = 1 , is the out-degree of the -th Web page; is the damping vector biased to a certain topic, where -th element in is the relevance of the -th Web page to the topic.

The propagation process of the topic-sensitive PageRank algorithm is biased by the damping vector in each iteration. After iterations, Web pages with higher damping values propagate higher scores to their neighbors in the link graph.

In order to simultaneously generate keywords valuable for advertising from short-text Web pages, Zhang et al. (2012a) combined the content bias and advertisement bias into the propagation process of topic-sensitive PageRank, given as

\mathbf{R}_{m+1} = \vartheta_1 \mathbf{C} + \vartheta_2 \mathbf{A} + (1 - \vartheta_1 - \vartheta_2) g \mathbf{R}_m, \tag{8}

where is the content damping vector (i.e., the vector of the relevance between a set of Wikipedia entities and the target Web page) obtained through a regression based on Yih et al. (2006), and is the advertisement damping vector obtained through calculating the frequency of each entity in the text of an advertisement; 1 and 2 are the parameters controlling the impact of the content relevant score to the content-sensitive PageRank value and the advertisement relevant score to the advertisementsensitive PageRank value, respectively; is the row-normalized Wikipedia graph link × matrix, where stands for the size of the entity set. The TSPR-based keyword generation method can generate highly relevant keywords that don’t occur on the target Web page, and can yield a significant improvement in precision over baseline methods (i.e., TF counting, supervised learning).

(4) Latent Dirichlet allocation (LDA). Users’ search intentions can be captured timely through exploring users’ query topics hidden in query logs, which are valuable for commercial advertising and helpful in keyword generation (Qiao et al., 2017). Latent Dirichlet allocation (LDA) is a generative probabilistic model for a collection of documents, assuming that each document is represented as

random mixtures over latent topics and each topic is characterized by a distribution over words (Blei et al., 2003).

Given a corpus consisting of topics over documents, and each document contains keywords, let and denote the topic (topic) distribution for document and the keyword (k) distribution for topic, respectively. Both and obey Dirichlet distributions with hyper parameters and . Given the seed keyword , Qiao et al. (2017) generated a set of candidate keywords by analyzing indirect associations between and keywords in query logs. Let I denote a keyword set consisting of seed keyword and candidate keywords , i.e., . Each keyword has a list of associative keywords , and the list of associative keywords constitutes a characteristic profile for , i.e., , where k is a keyword in and k.vol is its search volume. Taking each characteristic profile as a document, all characteristic profiles of keywords in I can be collected as a corpus, which is denoted as .

In keyword generation, LDA interprets the characteristic profile of (i.e., ) as a multinomial distribution over a set of topics and each topic is assigned a multinomial distribution over keywords in Corpus. Then the generation probability of a keyword k in the Corpus can be obtained through a process that samples a topic (topic) from specific with a characteristic profile and subsequently samples a keyword (k) from associated with topic, which is formulated as

Dir(\varphi^{(k)}|topic,\beta^{(k)}) d\varphi^{(k)}. \tag{9}

The sampling process discussed above is repeated k.vol rounds for each keyword in a characteristic profile. Then the generation probability of the observed Corpus can be obtained by repeatedly applying the sampling process to all the characteristic profiles, which is given as

(10)

Then Gibbs sampling was used to estimate parameters () and () as well as the latent variable by maximizing (| () , () ) . After the parameter estimation, each keyword can be projected into a topic distribution.

Considering the fact that two keywords related to similar topics might be competitive with each other due to market overlaps, Qiao et al. (2017) utilized topic distributions of keywords in query logs to infer competitive relationships between keywords. The mined topic structure is further combined into a factor graph model to extract a set of competitive keywords. The LDA-based method can generate competitive keywords which rarely co-occur with the seed keyword in queries.

On video sites with user-generated clips (e.g., YouTube), the text data is typically short. A video comprises a small number of hidden topics, which can be represented as keyword probabilities. Thereby, a video’s text can be generated from some distribution over those topics. Welch et al. (2010) explored an LDA-based keyword generation method for video advertising by mining a range of short-text sources associated with videos. Compared to statistical n-gram keyword generation methods, the LDA-based method performed better when a limited amount of text data is available, and can substantially improve the matching relevance and the profitability of generated keywords.

(5) Hierarchical Bayesian (HB). HB is a statistical model formed in multiple hierarchical structures which can be used to estimate the harmonic parameters in the keyword weighting formula for keyword generation. Given that a set of articles has been randomly selected from the corpus (e.g., Wikipedia articles), HB calculates the weight based on the importance of a keyword from the components of the Wikipedia article. Let be the total times that the title keyword doesn’t appear in the -th component, and be a random variable obeying a Beta distribution, i.e., ~( ′ , ′ ), based on the sample data . Following Nie et al. (2019), the posterior joint probability distribution ( , ′ , ′ |) is

p(\theta_j, \alpha'_j, \beta'_j | \eta_j) = \frac{p(\eta_j | \theta_j, \alpha'_j, \beta'_j) p(\theta_j | \alpha'_j, \beta'_j) p(\alpha'_j, \beta'_j)}{p(\eta_j)} \propto p(\eta_j | \theta_j, \alpha'_j, \beta'_j) p(\theta_j | \alpha'_j, \beta'_j) p(\alpha'_j) p(\beta'_j). \tag{11}

By using HB, the weight of each keyword extracted from articles in the corpus can be calculated and used to decide its priority in the candidate set. Nie et al. (2019) presented a keyword generation method taking advantage of the rich link structure of Wikipedia’s entry articles. Starting with a few seed keywords, the proposed method generates keywords in an iterative way, until a threshold is reached which balances the tradeoff between coverage and relevance of the generated keyword set. In the keyword generation process, the weight of a keyword in an article was calculated as () = 1() + 2() + 3() + 4() + 5 |()| , where () is the weight for a given keyword; (), (), () and () measure the importance of a keyword occurring in abstract, content, main text, information box, respectively; |()| indicates whether a keyword is in the anchor text; and ( = 1 − , = 1, ⋯ ,5) is the importance of a component where the keyword appears, which can be estimated by using the hierarchical Bayesian keyword weighting method. The HB-based method performs at a superior level with respect to both coverage and relevance.

3.2.4 Linguistics processing

Section titled “3.2.4 Linguistics processing”Multivariate methods are incapable of generating semantically relevant keywords that don’t contain or co-occur with the seed keywords. Moreover, multivariate methods suffer from the problem of topic drift which might generate keywords with little clicks and conversions and thus produce a massive overhead. Thus, more syntactic and semantic analysis are needed to capture users’ real search intentions in keyword generation. Keyword generation based on linguistics processing helps find more semantically related and profitable keywords. Notations used in linguistics processing models for keyword generation are presented in Table 3d.

Table 3d. Notations used in linguistics processing models for keyword generation

| Terms | Definition |

|---|---|

| 𝒌 | 𝒌 = {𝑘(1) , 𝑘(2) , … , 𝑘(𝑛) }, denoting the bags of words of keyword 𝑘 |

| 𝑙𝑎𝑛𝑑𝑖𝑛𝑔 | A landing page |

| 𝒍𝒂𝒏𝒅𝒊𝒏𝒈 | 𝒍𝒂𝒏𝒅𝒊𝒏𝒈 = {𝑙𝑎𝑛𝑑𝑖𝑛𝑔(1) , 𝑙𝑎𝑛𝑑𝑖𝑛𝑔(2) , … , 𝑙𝑎𝑛𝑑𝑖𝑛𝑔(𝑚) }, denoting the bags of words |

| of 𝑙𝑎𝑛𝑑𝑖𝑛𝑔 | |

| 𝑡𝑟𝑎𝑛𝑠𝑙𝑎𝑡𝑖𝑜𝑛 | A translation table denoting the likelihood of 𝑙𝑎𝑛𝑑𝑖𝑛𝑔(𝑗) being generated from 𝑘(𝑖) |

| (𝑙𝑎𝑛𝑑𝑖𝑛𝑔(𝑗) 𝑘(𝑖)) | |

| 𝑤𝑗 | A weighting parameter for 𝑙𝑎𝑛𝑑𝑖𝑛𝑔(𝑗) |

| 𝑚𝑎𝑝𝑝𝑖𝑛𝑔 | A mapping from word(s) to sense(s) |

| 𝑆𝑒𝑛𝑠𝑒𝑠𝐷𝐼𝐶(𝑘𝑖) | The set of senses encoded in a dictionary 𝐷𝐼𝐶 for 𝑘𝑖 |

| 𝑞 | A query keyword |

| 𝒒 | 𝒒 = {𝑞(1) , 𝑞(2) , … , 𝑞(𝑛)}, denoting a set of query keywords after sampling 𝑛 times |

In the following, we go through four linguistics processing methods in keyword generation, namely, translation model, word sense disambiguation, relevance-based language models and heuristics-based methods.

(1) Translation model (TM). Translation model is originally presented in statistical machine translation literature to translate text from one type of natural languages to another (Brown et al., 1993). In keyword generation, translation model learns translation probabilities from keywords to landing pages through a parallel corpus, and bridges the vocabulary mismatch by giving credits to words in a phrase that are relevant to the landing page but do not appear as part of it. By treating keywords and landing page as bags of words, i.e., = {(1) , (2) , … , () } and = {(1) ,(2) , … , () } , for each () ∈ , the probability of the translation probability from the keyword to the landing page (|) can be estimated as

(|) ∝ ∏ ∑ (() |()), (12) where (() |()) is the translation table, i.e., the probability that characterizes the likelihood of a word in a landing page being generated from a word in a keyword.

Ravi et al. (2010) proposed a keyword generation method by combining a translation model with language models to produce highly relevant well-formed phrases. Specifically, the probability (|) was modified by associating a weight for all () ∈ with respect to different features (e.g., a higher weight for words with HTML tags), i.e., (|) ∝ ∏ (∑ (() |())) , then the keyword language model () was instantiated with a bigram model capturing most of useful co-occurrence information and smoothed by a unigram model. Based on the Bayes’ law, the probability (|), i.e., the likelihood of a keyword given the landing page, can be estimated to rank the keywords, i.e., (|) ∝ (|) P(). Experiments based on a realworld corpus of landing pages and associated keywords showed that the TM-based method outperformed significantly over a method based purely on text extraction, and could generate many human-crafted keywords.

(2) Word sense disambiguation (WSD). WSD is the ability in computational linguistics to identify which sense (meaning) of a word is used in a particular context (Navigli, 2009). Through viewing a text as a sequence of words (1, 2, … , ), WSD can be defined as a task of assigning the appropriate sense(s) to word(s) in the text, i.e., to identify a mapping () from word(s) to sense(s), such that () ⊆ (), where () is the set of senses encoded in a dictionary for word , and () is that subset of the senses of which are appropriate in the text. In online advertising and SSA, in order to exclude the display of an advertisement from a population of non-target audiences, it is necessary to identify negative keywords. For example, Scaiano & Inkpen (2011) proposed a method to automatically identify negative keywords, using Wikipedia as a sense inventory and an annotated corpus. Specifically, they searched all links containing the seed keywords and collected all destination pages to find all senses for each keyword, then generated context vectors for each sense by tokenizing each paragraph containing a link to the sense being considered. In this process, all words were recorded and counted as a dimension in the context vector. After identifying the intended sense and creating a broad-scope intended-sense list, negative keywords are identified through finding words from the context vectors of the unintended senses with high TFIDF. The WSD-based method could find keywords strongly correlated with negative topics and improve the performance of advertising campaigns.

(3) The relevance-based language (RBL) model. The RBL model is used to determine the probability of observing a keyword in a collection of documents, i.e., (|) (Lavrenko & Croft, 2017). Given a query keyword and a large collection of documents (), both and each document are represented as a sequence of words. Each document in is related to and there is no training data about which document in is related to . The relevance model is referred to the underlying mechanism that determines the probability (|) . We assume that both and documents related to can be sampled from , but possibly by different sampling processes. After sampling times, we can observe query keywords = {(1) , (2) , … , ()}. Thus, given such observations, we can estimate the conditional probability of observing as

(13)

Jadidinejad & Mahmoudi (2014) proposed a keyword generation method based on a modified relevance-based language model, with Wikipedia as the knowledge base. First, by capitalizing Wikipedia’s disambiguation pages, different semantic groups for a given ambiguous query were extracted. Second, appropriate semantic groups were selected based on user’s intent, and an initial list of candidate keywords were generated by tracking bidirectional anchor links. Third, given a seed query and a collection of documents corresponding to candidate keywords from Wikipedia, the relevancebased language model was applied to estimate the probability of observing a keyword in documents, which helped measure the relevance between candidate keywords to the seed query. The RBL-based method is language independent, well-grounded with expert keywords and computationally efficient.

(4) Heuristics-based method. Consumers with a high conversion probability tend to use an online store’s internal search engine (Ortiz-Cordova et al., 2015). Scholz et al. (2019) proposed a heuristicsbased method to extract keywords from an online store’s internal search logs. Specifically, a set of candidate keywords identified from the internal search and other sources (e.g., Thesauri) was enriched with other keyword generation tools and filtered according to the monthly search volume in Google, then keywords whose internal search volumes are higher than a predefined threshold were included into the target set. This heuristics-based method can substantially increase the number of profitable keywords and the conversion rate, and in the meanwhile, decrease the average cost per click.

3.2.5 Machine learning

Section titled “3.2.5 Machine learning”The performance of linguistics processing is influenced by the quality of sources (e.g., Web pages, texts, dictionaries), which might make suggested keywords deviate from search users’ real intentions. Machine learning can automatically learn and produce more accurate and reliable results by consuming a large amounts of data accumulated in online advertising, executing feature engineering without little interference of humans and capturing more rich behavioral information and structural relationships with high-order representation. Thus, machine learning models can generate large sets of relevant keywords by capturing the intention of users and processing the up-to-date information sources. Notations used in machine learning models for keyword generation are presented in Table 3e.

Table 3e. Notations used in machine learning models for keyword generation

| Terms | Definition |

|---|---|

| 𝜉𝑛 | A random variable describing the position of random walk after 𝑛 steps |

| 𝓌𝑛 | The 𝑛-th step of a random walk |

| 𝑐̃ | A concept that an advertiser is interested in |

| 𝑙𝑘 | The random variable related to a concept for query keyword 𝑘 |

| 𝑙𝑢 | The random variable related to a concept for URL 𝑢 |

| 𝒯 | The probability of transiting to the absorbing null class node |

| 𝐷 | Dataset |

| 𝑛̂ | A node in decision tree |

| ′ 𝑛̂ | A child node of 𝑛̂ |

| 𝐶(𝑛̂ Υ) | A set of child nodes of 𝑛̂ |

| 𝐷𝑛̂ | A subset of dataset 𝐷 at node 𝑛̂ |

| Υ | The branching criterion |

| 𝐺(𝑛̂, Υ) | The quality of the partition of 𝐷𝑛̂ induced by Υ |

| 𝑥Υ | An element of 𝒙 |

| 𝒳Υ | A set of unique values of categorical variable 𝑥Υ |

| 𝒳Υ,𝑘 | The 𝑘-th value of 𝒳Υ |

| 𝒳Υ | The number of values in 𝒳Υ |

|---|---|

| 𝐻 | The entropy |

| 𝑠𝑒𝑞, 𝑠𝑒𝑞′ | A sequence |

| ′ 𝑠𝑒𝑞𝑖 (𝑠𝑒𝑞𝑖 ) | The 𝑖-th element in sequence 𝑠𝑒𝑞(𝑠𝑒𝑞′ ) |

| 𝑠𝑒𝑞_𝑖𝑑 | The id of sequence 𝑠𝑒𝑞 |

| 𝑠𝑒𝑞_𝑝𝑎𝑡𝑡𝑒𝑟𝑛 | A sequential pattern |

| 𝑲𝑇 | 𝑇 The union of the keyword matrix [𝒌𝑇,1 , … , 𝒌𝑇,𝑛] and the target dataset {𝒌𝑇,𝑖 } |

| 𝑲𝐿 | 𝑇 The union of the keyword matrix [𝒌𝐿,1 , … , 𝒌𝐿,𝑚] and {𝒌𝐿,𝑖 }, i.e., a subset of 𝑲𝑇 |

| that is chosen to be labeled | |

| 𝜖𝑖 | The measurement error |

| 𝑓𝑇 | 𝑇 𝑓𝑇 = [𝑓(𝑘𝑇,1), … , 𝑓(𝑘𝑇,𝑛)] , denoting the function values on all the available data |

| 𝐾𝑇 | |

| 2 𝜎 | The variance of a distribution |

| 2𝑪𝑓 𝜎 | A covariance matrix |

| 𝑠𝑖𝑘 | A binary parameter indicating whether the 𝑖-th ad is subscribed with the keyword 𝑘 |

| 𝒞𝑖 | A binary variable indicating whether the 𝑖-th ad belongs to category 𝑗 |

| 𝜇𝑗𝑘 | The mean of the prior distribution for the keyword subscription probability |

| 𝑟̃𝑖𝑗 | The probability that the 𝑖-th ad belongs to category 𝑗 |

| 𝑐𝑎𝑛𝑑𝑘𝑛−1 | A candidate set of keywords with one-step relevance to 𝑘𝑛−1 |

| (𝑘𝑖 ) 𝑅1 , 𝑘𝑖+1 | The one-step relevance of keyword pair (𝑘𝑖 , 𝑘𝑖+1 ) |

| 𝑅𝑛 | 𝑅𝑛 (𝑘0 , 𝑘 𝑘1 , 𝑘2 , … , 𝑘𝑛−1 ), denoting the n-step relevance of 𝑘0 and 𝑘 via the |

| intermedia keywords 𝑘1 , 𝑘2 , … , 𝑘𝑛−1 | |

| 𝑯 | 𝑯 = (𝒉1 , 𝒉2 , … , 𝒉𝑛), denoting hidden representations |

| 𝒆(𝑘𝑡) | The embedding of keyword 𝑘𝑡 |

| 𝒄𝒕𝑡−1 | A context vector |

| 𝒔𝑡 | A state vector |

| 𝐾̃ | (𝑘̃ , 𝑘̃ , … , 𝑘̃𝑚), denoting a generated target keyword sequence 𝐾̃ = 1 2 |

| 𝑑𝑜𝑚𝑎𝑖𝑛𝑘, 𝑑𝑜𝑚𝑎𝑖𝑛𝑘̃ | The corresponding domain categories of 𝐾 and 𝐾̃ |

In the literature, researchers have investigated several statistical learning methods to facilitate keyword generation in SSA, including random walk, decision tree, sequential pattern mining, active learning, Bayesian online learning, multi-step semantic transfer analysis and sequence-to-sequence learning.

(1) Random walk (RW). RW can be used to describe a keyword generation path including a succession of random steps in the query-click graph extracted from search logs. In essence, clicks represent a strong association between queries and URLs (Yang & Zhai, 2022). Hence, advertisers can exploit the proverbial “wisdom of the crowds” to reconstruct query-click logs as a weighted bipartite graph (,,). Specifically, query keywords in and URLs in constitute the partitions of the graph, and the number of times that users issued query k to the search engine and clicked on URL u (i.e., ) can be regarded as weight on the edge . Suppose that is a sequence of independent and identically distributed random variables, a random walk is a random process which describes a path consisting of a succession of random steps on some mathematical space (Xia et al., 2019). It can be denoted as , where is a random variable describing the position of random walk after n steps and , where denotes the step of the random walk.

Fuxman et al. (2008) formulated the keyword generation problem within a framework of Markov Random Fields and developed an RW-based algorithm with absorbing states to traverse a query-click graph. Given a concept that an advertiser is interested in, a seed set of URLs relevant to was constructed manually, which can be regarded as the representation of . Let and denote the random variable related to for query keyword k and URL u, respectively. The probability that a random walk starting from query k will be absorbed at concept , i.e., , can be computed as

P(l_k = \tilde{c}) = (1 - T) \sum_{u:(k,u) \in R} \frac{R_{k,u}}{\sum_{u:(k,u) \in R} R_{k,u}} P(l_u = \tilde{c}), \tag{14}

where is the probability of transiting to the absorbing null class node. The null class node is a node in a query-click graph whose probability (i.e., or ) is below a threshold, and the set of null class nodes define the boundary of the query-click graph. Similarly and recursively, for all URLs in the seed set, ; for other URLs (i.e., unlabeled URL) in search logs, the probability of a random walk that starts from URL u and ends up being absorbed in concept , i.e., , can be computed as

(15)

The processes defined by and iterated alternately until the convergence. Discarding and whose probabilities lower than a predefined threshold, we can obtain , the probability that query k belongs to seed concept , for every query in the set K. we can obtain , i.e., the probability that k will be absorbed at , for every query keyword in search logs, which can be regarded as the relevance between k and . The RW-based can generate a large amount of high-quality keywords with minimal effort from advertisers.

(2) Decision tree (DT). DT is a flowchart-like structure where paths from the root to leafs represent classification rules. Given a dataset with x being a feature vector of keywords

and y being the label of relevance, DT represents a recursive partition of D such that (a) each node of the tree stores a subset of D with the root node storing D, (b) the subset at node is the union of the mutually disjoint subsets stored at its child nodes , i.e., forms a partition of where denotes the set of child nodes of , and (c) the partition is determined by a branching criterion Y. The optimal DT is built by recursively identifying the locally optimal branching criterion at each node starting from the root node while subjecting to some stopping as well as pruning criteria. Specifically, at node , the optimal branching criterion is

\theta_{\hat{n}}^* = argmax_Y \ G(\hat{n}, Y), \tag{16}

where measures the quality of the partition of induced by Y.

In the decision tree-based scheme, GM et al. (2011) developed a keyword generation approach to learn the website-specific hierarchy from the (Web page, URL) pairs of a website, and keywords are populated on nodes of the induced hierarchy via successive top-down and bottom-up iterations. Human evaluations showed that their method outperformed previous approaches by Broder et al. (2007) and Anagnostopoulos et al. (2007) in terms of relevance. In keyword generation, as specified by GM et al. (2011), an instance (x, y) corresponds to a Web page, where x is a vector of features extracted from the URL of the Web page, and y is the cluster of Web pages with similar contents. As an example, consider a Web page with the URL “www.examplewear.com/exampleshop/product.php?view=detail& group = shoes & dept = men”, from which four features can be extracted, i.e., (the name of the php script), =“detail” (the value of argument “view”), =“shoes” (the value of argument “group”) and = “men” (the value of argument “group”). Each element of x, i.e., , is a categorical variable having a set of unique values denoted by . For example, “women”, “outlet”, “accessories”}, which is constructed by going through all URLs and collecting values of the “dept” argument. For convenience, let denote the k-th value of assuming an arbitrary order, and denote the number of values in . The branching criterion is to select a feature , according to which is partitioned. The quality of the resulting partition is measured with the gain ratio metric, specified as follows.

G(\hat{n}, Y) = \frac{H(y|D_{\hat{n}}) - \sum_{k=1}^{|\mathcal{X}_{Y}|} P(x_{Y} = \mathcal{X}_{Y,k}|D_{\hat{n}}) H\left(y|D_{\left(\hat{n}, \mathcal{X}_{Y,k}\right)}\right)}{H(x_{Y}|D_{\hat{n}})}, \tag{17}

where is the estimated probability of having value on feature dimension i for a data instance in , is the entropy of labels in measuring how impure (diversified)

Web pages in are in terms of assigned clusters, is the entropy of labels in , and is the entropy of feature Y in measuring the complexity of the partition. The gain ratio metric measures how much impurity (content dissimilarity) reduction can be achieved through a data space partition, and favors partitions with high impurity reduction but low partition complexity, which is helpful in preventing overfitting.

(3) Sequential pattern mining (SPM). SPM aims to find frequent patterns from a set of sequences (Mabroukeh & Ezeife, 2010), which is used to find keywords in online broadcasting contents. Given a set of items (e.g., terms) , two sequences and , where ( ) is a subset of items K, if there exist integers making , , …, , seq’ is called a subsequence of seq, i.e., . Given a sequence dataset D, i.e., a set of tuples , where seq is a sequence and is the id of seq, the support of a subsequence is the number of tuples in the dataset containing seq’, given as

support_D(seq') = |\{\langle seq\_id, seq \rangle | (\langle seq\_id, seq \rangle \in D)^{\wedge} (seq' \in seq)\}|. \tag{18}

Given a positive integer as the support threshold, a sequence seq’ is called a sequential pattern if .

In keyword generation from online community contents, Li et al. (2007) used sequential mining to discover language patterns (i.e., a sequence of frequent words around an extracted keyword). The Web has become a communication platform, where users spend a large amount of time on broadcasting and interactions with others in online communities, e.g., blogging, posting, chatting, etc. Online contents are composed of specific keywords, phrases and wordings associated with frequently changed topics in communities. Keywords are extracted once a sentence is matched with a pattern from online broadcasting contents and scored with the sum of the confidence of matched patterns, i.e., , where is the remaining part of sequential pattern after is removed. The process of sequential pattern mining and keyword extraction iterates and eventually generates a large number of keywords. Experiments showed that the proposed approach can find meaningful language patterns and reduce the cost of manual data labeling, compared with traditional

statistical approaches that considered each word individually.

(4) Active learning (AL). AL is a special type of machine learning where a learning algorithm actively queries users (or some information sources) to label new data points with the desired outputs under situations where unlabeled data is abundant but manual labeling is expensive. Transductive Experimental Design is an active learning approach which can be used to select candidate keywords (for labeling and training) that are hard to predict and representative for unlabeled candidates. Let denote the union of the keyword matrix and the target dataset , and denote the union of the keyword matrix and a subset of that is chosen to be labeled (i.e., ). Define as the output function learned from the measure , i = 1, …, m, where is the weight vector, is measurement error and (label) is the binary relevance score. Let be the function values on all the available data . Then the predictive error has the covariance matrix with

\boldsymbol{C}_f = \boldsymbol{K}_T (\boldsymbol{K}_L^T \boldsymbol{K}_L + \mu \boldsymbol{I})^{-1} \boldsymbol{K}_T^T. \tag{19}

The total predictive variance on the complete data set is given as

(20)

The objective is to find a subset which can minimize the total predictive variance.

Users’ relevance feedback is another type of valuable information source for profitable keyword generation. Wu et al. (2009) proposed an efficient interactive model based on an active learning approach called transductive experimental design using relevance feedback for keyword generation in SSA. Each keyword was represented using a characteristic document consisting of top-hit search snippets for a seed keyword. In a seed’s characteristic document, top-n weighted terms were recommended as candidate keywords. The AL-based method could significantly improve the relevance of generated keywords.

(5) Bayesian online learning (BOL). Bayesian online learning replaces the true posterior distribution with a simple parametric distribution, and defines an online algorithm by a repetition of two steps (i.e., an update of the approximate posterior when a new sample arrives and an optimal projection into the parametric family) (Opper & Winther, 1999). BOL is helpful to improve the computational efficiency when estimating the unknown variables based on a large data. In SSA, advertisers use a set of keywords to describe an advertisement. Let K be the set of subscribed keywords

in the i-th ad: if the i-th ad is subscribed with keyword , then , else . Assuming that the keyword vector of an ad is sampled from one or several ad categories, such as automobiles and travel, let denote the probability that the i-th ad is subscribed by k when it belongs to category j. Then under the scheme of Bayesian online learning, each data point of ads is processed at a time, and the posterior distributions of the probability obtained after processing a data point are passed as the prior distributions for processing the next data point. Keywords can be generated to an advertiser based on keyword subscriptions of other advertisers. The probability of an unobserved keyword that is implicitly related to the i-th ad can be given as

p(s_{ik'} = 1 | \{s_{ik}\}_{k \in K}) = \sum_{i=1}^{n} \tilde{r}_{ii} \mu_{jk}, \tag{21}

where is the probability that the i-th ad belongs to category j, and is the mean of prior distribution for the keyword subscription probability .

Schwaighofer et al. (2009) provided an efficient Bayesian online learning algorithm to group advertisements into categories and applied the BOL algorithm to generate keywords. Experiments based on two advertisement datasets showed that the BOL-based algorithm is suitable for large scales of data streams because of its low computational cost.

(6) Multi-step semantic transfer analysis (MTSTA). The MTSTA-based keyword generation can yield keywords based on both their direct and indirect relevance to the seed keywords via semantic transfer. Given a seed keyword , for keyword k, if there exist n-1 intermedia keywords satisfying the conditions that k is in the candidate set of (i.e., ), is in the candidate set of (i.e., ), then the n-step relevance of and k via the intermedia keywords , can be defined as

(22)

where , , are the one-step relevance of keyword pairs.

The MTSTA-based keyword generation finds keywords with multi-step relevance that is no less than a certain threshold in the query logs (Zhang & Qiao, 2018; Zhang et al., 2021). In order to explore keywords with indirect relevance, Zhang and his colleagues explored a MTSTA-based keyword generation method by iteratively conducting co-occurrence analysis to form a hierarchal multi-step relevance tree, and developed a pruning strategy to reduce the computational consumption in generating the transfer paths.

(7) Sequence-to-sequence learning (Seq2Seq). The encoder-attention-decoder framework based on Seq2Seq learning is an end-to-end approach to sequence learning that makes minimal assumptions on the sequence structure (Sutskever et al., 2014). In keyword generation, the encoder represents an input keyword sequence with hidden representations , i.e.,

\boldsymbol{h}_t = GRU(\boldsymbol{h}_{t-1}, \boldsymbol{e}(k_t)), \tag{23}

where GRU is gated recurrent unit (Chung et al., 2014) and is the embedding of keyword .

The decoder updates state as follows:

\mathbf{s}_{t} = GRU(\mathbf{s}_{t-1}, [\mathbf{ct}_{t-1}; \mathbf{e}(\tilde{k}_{t-1})]), \tag{24}

where is the context vector defined as a weighted sum of the encoder’s hidden states, and is the embedding of a previously decoded keyword.

After obtaining the state vector , the decoder samples from the generation distribution and generates a keyword :

\tilde{k}_t \sim P(\tilde{k}_t | \tilde{k}_1, \tilde{k}_2, \dots, \tilde{k}_{t-1}, ct_t) = softmax(\mathbf{w} \cdot \mathbf{s}_t). \tag{25}

forms a sequence of generated keywords.

Zhou et al. (2019) proposed a keyword generator based on Seq2Seq learning to generate domain-specific keywords through estimating the probability , where and are the corresponding domain categories of K and , respectively. In addition, a reinforcement learning algorithm was developed to strengthen the domain constraint in the generation process. The Seq2Seq-based method could generate diverse, relevant keywords within the domain constraint.

However, statistical learning methods have some limitations, such as requiring a set of labelled keywords and the low efficiency in online computation.

3.3 Features used for Keyword Generation

Section titled “3.3 Features used for Keyword Generation”In the literature, keyword generation methods have been proposed on the basis of five major information sources to extract keywords and relationships among them, which are described as follows.

(1) Websites and Web pages: The Web has become a vital place for firms to post advertisements and other commercial information (Thomaidou and Vazirgiannis, 2011). In the meanwhile, the richness of information sources on the Web entitles advertisers to build a domain-specific keyword pool. In

particular, websites and Web pages can be used as a corpus of the source text to extract relevant keywords of interest for their online advertising campaigns. In this branch of keyword generation methods, meta-tags of Web pages are used as an important information feature. The meta-tag crawler sends one or more seed keywords to search engines and extracts a set of meta-tag keywords from Web pages in the organic list. Several popular online advertising tools (e.g., WordStream and Wordtracker) employ meta-tag crawlers to obtain a pool of meta-tag keywords and then based on it suggest relevant keywords for advertisers.

- (2) Search users’ query logs: User’s query logs with search engines timely reflect their intents (Da et al., 2011), which are significantly valuable for commercial communications and advertising. This stream of keyword generation primarily utilizes statistical information of co-occurrence relationships among keywords mining from historical query logs.

- (3) Search results snippets: One or several seed keywords are sent to search engines and resulting search result snippets are used to generate relevant keywords.

- (4) Advertisement databases and advertisers’ bidding data: Search advertisement databases and advertising logs such as bidding data are taken as inputs to obtain relevant keywords.

- (5) Domain semantics and concept hierarchy: Keyword generation methods relying on query logs mining generally ignore the semantic similarity between keywords, thus fail to suggest keywords that don’t explicitly contain seed keywords or have less co-occurrence with but are semantically related to seed keywords. To this end, the fifth category of keyword generation primarily focuses on the expansion of the keyword scope by taking advantage of conceptual hierarchies built manually or extracted either from vocabulary dictionaries/corpus (e.g., thesaurus dictionary, Wikipedia) or constructed by domain experts.

In the following, we explore features used in prior research in the five streams. Tables 4a-4e summarize input/features used in keyword generation in five research streams.

In the literature, a variety of features are used to represent keywords, which have great contributions to the effectiveness of keyword generation solutions. In keyword generation from websites and Web pages, from Table 4a, it is apparent that information retrieval oriented features are most widely used in keyword extraction from websites and Web pages. As reported by Yih et al. (2006), information retrieval oriented features and query log features are helpful for keyword generation, while linguistic features don’t seem to work. Consequently, Berlt et al. (2011) adopted features extracted from the ad collection and GM et al. (2011) took the hierarchic URL tokens as features, while omitting linguistic features. However, Li et al. (2007) found that features of language patterns can help keyword generation, and Zhou et al. (2007) inserted features such as title and keyword importance into meta keywords vector to improve keyword generation. In addition to features from Web pages as in Yih et al. (2006), Wu and Bolivar (2008) explored features from the view of retailers (e.g., eBay). In keyword generation for video advertising, Lee et al. (2009) advocated features reflecting the targeted scene situation.

Table 4a. Input/Features for Keyword Generation from Websites and Web Pages

| Features | |||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| L | C | H | M | T | U | I | R | S | L | Q | R | N | H | C | C | T | L | S | |||||||||

| Refs. | F | A | Y | F | I | R | R | L | D | C | L | E | R | 1 | O | I | D | P | F | ||||||||

| L | F | L | P | F | C | D | |||||||||||||||||||||

| Yih et al. | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | ||||||||||||||||

| (2006) | |||||||||||||||||||||||||||

| Wu & | √ | √ | √ | √ | √ | √ | √ | √ | √ | ||||||||||||||||||

| Bolivar | |||||||||||||||||||||||||||

| (2008) | |||||||||||||||||||||||||||

| Berlt et al. | √ | √ | √ | √ | √ | √ | √ | √ | √ | √ | |||||||||||||||||

| (2011) | |||||||||||||||||||||||||||

| GM et al. | √ | √ | |||||||||||||||||||||||||

| (2011) | |||||||||||||||||||||||||||

| Zhou et al. | √ | √ | √ | √ | √ | √ | |||||||||||||||||||||

| (2007) | |||||||||||||||||||||||||||

| Li et al. | √ | ||||||||||||||||||||||||||

| (2007) | |||||||||||||||||||||||||||

| Lee et al. | √ | √ | √ | √ | √ | √ | √ | ||||||||||||||||||||

| (2009) |

Note: LF=Linguistic Features; CA=Capitalization; HY=Hypertext; MF=Meta related Features (e.g., meta section, meta keywords, meta description); TI=Title; URL=Uniform Resource Locator; IRF=Information Retrieval Oriented Features (e.g., TF, IDF, TF history, log value of TF and DF); RL=Relative Location (e.g., wordRatio, sentenceRatio, wordDocRatio); SDL=Sentence and Document Length; LCP=Length of the Candidate Phrase; QLF=Query Log Features (e.g., whether the word appears in the query log files as the first/interior/last word of a query keyword, whether the word never appears in any query log); RE=Root Entropy; NRC=The Number of Root Categories; H1=the Highest Section Level; CO=Co-occurrence; CID=Class ID (i.e., the category of Web pages or advertisements such as sports); TD=Text Descriptions (i.e., a detail description to the product, company or related matter of Web pages or advertisements); LP=Language Pattern; SF=Situation Features.

In online advertising, clicks demonstrate a strong relationship between queries and URLs. This makes query logs valuable information for keyword generation (Bartz et al., 2006; Fuxman et al., 2008), as illustrated in Table 4b. Meanwhile, joint search demand and keyword search demand are informative and helpful in keyword expansion and competitive strategy development (Qiao et al., 2017). Moreover, semantic and domain-specific information entitles to generate keywords that aren’t present in the corpus (Zhou et al., 2019). In addition, online store’s internal search is another source to extract keywords relevant to consumer behaviors (Scholz et al., 2019).

Table 4b. Input/Features for Keyword Generation from Query Logs

| Features | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Refs. | SL | URL | CL | SD | KO | HT | SF | DSF | NIS | |||||||