Keyword Level Bayesian Online Bid Optimization For Sponsored Search Advertising

Abstract

Section titled “Abstract”Bid price optimization in online advertising is a challenging task due to its high uncertainty. In this paper, we propose a bid price optimization algorithm focused on keyword-level bidding for pay-per-click sponsored search ads, which is a realistic setting for many firms. There are three characteristics of this setting: “The setting targets the optimization of bids for each keyword in pay-per-click sponsored search advertising”, “The only information available to advertisers is the number of impressions, clicks, conversions, and advertising cost for each keyword”, and “Advertisers bid daily and set monthly budgets on a campaign basis”. Our algorithm first predicts the performance of keywords as a distribution by modeling the relationship between ad metrics through a Bayesian network and performing Bayesian inference. Then, it outputs the bid price by means of a bandit algorithm and online optimization. This approach enables online optimization that considers uncertainty from the limited information available to advertisers. We conducted simulations using real data and confirmed the effectiveness of the proposed method for both open-source data and data provided by negocia, Inc., which provides an automated Internet advertising management system.

Keywords Online advertising · Bid price optimization · Bayesian inference · Bandit algorithm · Online optimization

1 Introduction

Section titled “1 Introduction”With advances in information technology, the Internet advertising market has continued to grow year after year. Internet advertising revenue in the USA in 2021 was 189 billion USD, which is 2.9 times greater than TV ad spending in the same year [1, 2]. Against this background, Internet advertising has become indispensable for corporate promotion, regardless of the size of the company.

In this paper, we propose a machine learning method that automatically determines some of the configuration items in advertising operations from the perspective of an advertiser (a company placing Internet advertisements). Our focus point is the difficulty advertisers face in managing advertisements. To effectively distribute advertisements, a great deal of information in addition to user responses is necessary, including detailed attribute information on users who have viewed advertisements and information on competing advertisers. However, most of this information is not disclosed to advertisers, even though it is owned by the advertising platform. Advertisers thus need to make decisions based only on limited information, and many of them currently outsource their ad operations to agencies as a result. Our objective is to offer a machine learning method that automatically determines some delivery settings using only the information available to the advertiser, thereby supporting efficient operation of Internet advertising by the advertiser alone, independent of an agency.

We focus on determining bid prices for sponsored search ads, particularly Amazon ads. Sponsored search ads are ads that appear on search results screens in conjunction with the search terms entered, and as of 2021, they accounted for 41.4% of U.S. Internet advertising revenue (78.3 USD) [2]. The bid price is the maximum amount an advertiser can pay for one click on an ad. This parameter is crucial because it directly affects the cost of advertising and the sales generated via advertising. Note that while many studies on bid price optimization in sponsored search have utilized the search rank of the advertisement for optimization, the Amazon advertisements covered in this paper do not use search rank because it is not possible to obtain it due to its terms of use. We therefore propose a method for automatically determining bid prices for sponsored search ads using the limited information available to advertisers.

The key contributions of this work are as follows:

- We mathematically modeled the bid optimization in a realistic setting consisting of three characteristics: “The setting targets the optimization of bids for each keyword in pay-per-click sponsored search advertising”, “The only information available to advertisers is the number of impressions, clicks, conversions, and advertising cost for each keyword”, and “Advertisers bid daily and set monthly budgets on a campaign basis”. This setting was proposed in a joint research project with negocia, Inc., which provides an automated Internet advertising management system, and is a common situation faced by many companies. To our best knowledge, there have been no prior studies on bid optimization in similar settings.

- We proposed Bayesian AdComB, an algorithm that optimizes the bid price from the limited information available to advertisers. The algorithm models the relationships among advertising metrics by constructing a Bayesian network and then implements Bayesian inference to estimate the distribution of those metrics, tak-ing into account the uncertainty of Internet advertising. The bid price optimization is then performed online with the aid of a bandit algorithm, which is designed to maximize the cumulative long-term reward in situations where estimation and optimization must be done sequentially. This bid price optimization problem can be solved by reformulating it as an integer programming problem.

• We ran simulations using real data from negocia, Inc. and the iPinYou dataset [3], which is an open dataset. The results demonstrated not only the effectiveness of our proposed method but also the flexibility and extensibility of the framework.

Section 2 of this paper describes the problem setting of bid amount optimization, and Section 3 introduces related work. In Section 4, we explain the proposed method, and in Section 5, we present the numerical experiments we conducted to verify the effectiveness of our method. We conclude in Section 6 with a brief summary and mention of future work.

2 Problem Setting

Section titled “2 Problem Setting”2.1 Bid Optimization for Sponsored Search Ads

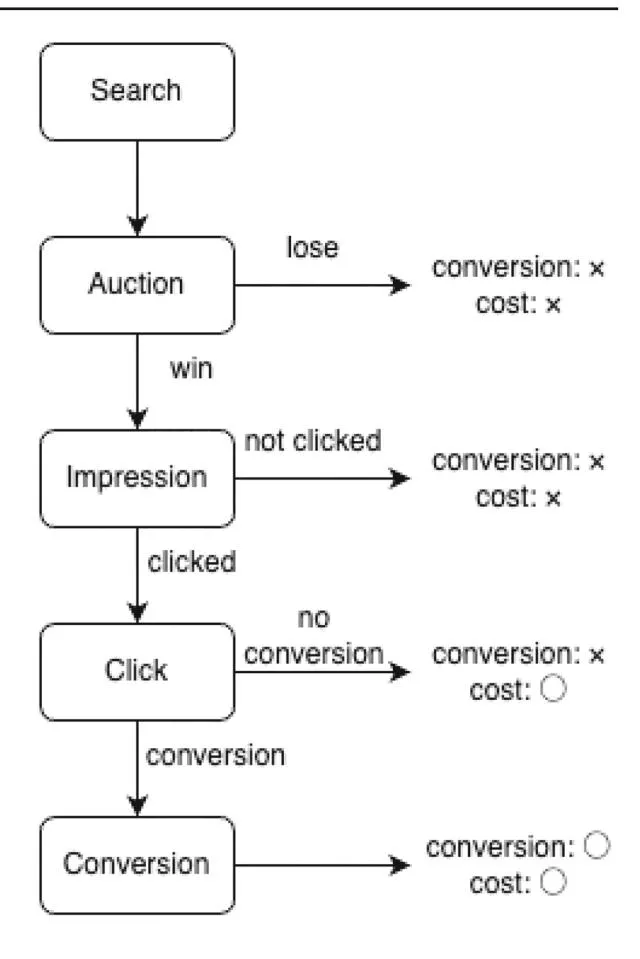

Section titled “2.1 Bid Optimization for Sponsored Search Ads”The focus of this work is bid price optimization at the keyword level for pay-per-click sponsored search advertising. Sponsored search advertising generates sales through a five-step process: search, auction, impression, click, and conversion, as shown in Fig. 1. Advertisers using sponsored search ads register multiple phrases called keywords for each advertisement. When a user performs a search, an auction is held among advertisers whose keywords match the search phrase, and the winning advertisement is displayed to the user (impression). This auction is typically a second-price auction, in which the price paid is the bid of the second-place bidder. On advertising platforms such as Google Ads and Amazon Ads, the results of this auction are affected not only by the bid price for specific keywords but also by an undisclosed score calculated by the advertising platform [4, 5]. In pay-per-click advertising, advertising costs are incurred only when a user clicks on the ad. After a click, the advertiser seeks to generate a conversion (e.g., purchase of a product).

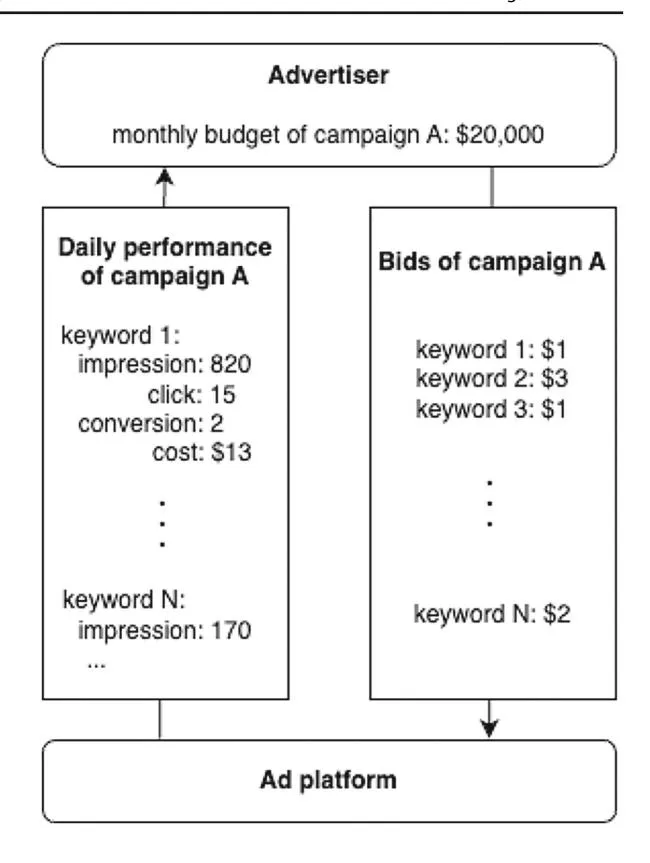

The interaction between advertisers and ad platforms is shown in Fig. 2. Advertisers manage multiple keywords in a unit called a campaign, and bid prices are determined daily for all keywords in the campaign. Note that advertisers cannot change their bid prices during the course of the day. Advertisers receive daily feedback from the ad platform, including the number of impressions, clicks, and conversions as well as the advertising cost for each keyword. However, some information is not available, such as the number of times the keyword was searched, the bid prices of other advertisers, and ad positions. Also, advertisers set budgets for the entire campaign but cannot set budgets for individual keywords. This budget is set over a longer span of time than the bid, e.g., on a monthly basis. If the budgeted amount runs out in the middle of the period, ads will not be displayed unless the budget is increased. It is extremely important for advertisers to avoid situations like this, as it represents a serious opportunity loss.

Fig. 1 Flow of a user’s search through a search engine and conversion through sponsored search advertising

Overall, the setting we examine is characterized by the following three main features:

- Bid price optimization is targeted at the keyword level for pay-per-click sponsored search ads

-

- The only information available to advertisers is the number of impressions, clicks, and conversions and the advertising cost for each keyword

-

- Advertisers determine bid prices daily and set monthly budgets for the entire campaign

This setting was proposed in a joint research project with negocia, Inc., which provides an automated Internet advertising management system.

2.2 Problem Formulation

Section titled “2.2 Problem Formulation”The bid price optimization in our setting can be formulated as follows. Let N be the total number of keywords, T the total number of bidding rounds, and the bid price

Fig. 2 Interaction between advertisers and ad platforms

for keyword i in period t. b, are the minimum and maximum bid prices that can be set, and , are the ad cost and conversion if we set a bid price of b for keyword i in period t. Also, we define for . The advertiser’s goal is to maximize the total number of conversions with an advertising cost below B.

subject to

(1)

The objective function of optimization problem (1) shows the total number of conversions throughout all rounds, and the first constraint shows the constraint that the total ad cost throughout all rounds is within the budget B. The second constraint shows that there is a fixed range that can be set for bid prices for all keywords in all rounds. In this formulation, ad cost and the number of conversions for each keyword

are expressed as a function of bid price, ci(b), ui(b), but these functions are unknown and must be predicted from the previous data.

2.3 Challenges

Section titled “2.3 Challenges”There are two main challenges with bid price optimization in the problem setting discussed Section 2.1: high uncertainty and the need for sequential forecasting and optimization. The uncertainty is caused by three main factors. The first factor is the scarcity of positive example data. In pay-per-click advertising, neither conversions nor advertising costs occur unless an ad is clicked on. However, click-through rates for Internet advertising are very low: according to a survey, the average click-through rates are 3.17% for sponsored search ads on Google and 0.46% for display ads [6]. Therefore, some keywords have very few (or no) clicks, making the prediction of conversions and advertising cost inaccurate. The second factor is the paucity of data available to advertisers. To optimize bid prices, information such as competitors’ bids, the demographics of the ad’s viewers, and the location of the ad is very important, but such information is often not available to advertisers. Therefore, advertisers must determine bid prices from limited information such as daily ad impressions, clicks, conversions, and advertising cost, which makes accurate forecasting difficult. The third factor is uncertainty derived from human behavior. User behavior such as clicks and conversions is difficult to predict because it is related to various factors such as user demographics, personality, and time. This uncertainty creates the need for sequential estimation and optimization. Bid price optimization requires knowing the conversions, advertising cost, and other advertising metrics for all possible bid price candidates, but the aforementioned uncertainties make it difficult to make predictions with sufficient accuracy. Therefore, an approach that optimizes the bid price once and fixes it thereafter is not likely to be the optimal strategy, and sequential estimation and optimization are required each time the information is updated.

3 Related Work

Section titled “3 Related Work”Bid price optimization for Internet advertising has been studied under various conditions, including different types of ads, bidding styles, and constraints.

Examples of studies on bid price optimization for sponsored search advertising include works by [7–11]. Feng et al. [7] formulated the bid price optimization problem as an adversarial bandit problem and proposed an algorithm based on Exponentialweight policy for Exploration and Exploitation (Exp3) [12] to optimize bid prices for sponsored search advertising. Their algorithm focuses on optimizing a single bid price without considering budget constraints. Abhishek et al. [8] optimize bids by creating a model that predicts the number of clicks and ad costs based on multiple assumptions. Thomaidou et al. [9] proposed a comprehensive operational framework for sponsored search that includes bid price optimization and automatic ad text generation. Zhang et al. [10] approximate and optimize the bid price optimization problem as a sequential quadratic programming problem. However, the methods proposed in these papers

require information that is difficult for advertisers to obtain, such as the location of the ads on the search result page. Uncertainty in sponsored search advertising is a challenge in contexts other than bid price optimization. Rusmevichientong et al. [13] proposed a method to prioritize keywords for bidding with uncertainty, and [14, 15] optimize the budget allocation for sponsored search advertising with uncertainty on the basis of investment portfolio theory.

Avadhanula et al. [16] and Nuara et al. [17] examined bid price optimization for multiple platforms. Avadhanula et al. [16] provided a multi-platform bid price optimization algorithm based on a method called stochastic bandits with knapsacks [18]. Their algorithm is designed for a setting with a high frequency of bid price decisions, such as real-time bidding for display advertising, and thus is not suitable for the setting of this paper, where bid prices can be changed only once a day at most. Nuara et al. [17] formulated a bid price/daily budget optimization problem for multiple platforms as a combinatorial semi-bandit problem [19] and provided an algorithm for simultaneous optimization. Their algorithm is based on the Gaussian process upper confidence bound (GP-UCB) [20], which predicts conversions and advertising costs for each bid price candidate and then optimizes bid prices using dynamic programming. Although their algorithm targets bid price optimization for multiple platforms, it can be extended to our setting, namely, multiple keywords on a single platform. However, we cannot directly apply their algorithm because it is not possible to control advertising costs by setting individual daily budgets in our setting.

Studies that have applied Bayesian inference to the field of Internet advertising include works by [21-26]. Hou et al. [24] have made ROI predictions for a portfolio consisting of multiple keywords in sponsored search advertising. They modeled the relationship between bid prices, clicks, conversions, and other advertising metrics using Bayesian networks, which is an approach similar to the Bayesian modeling utilized in this study. However, their study aimed to predict the ROI of advertising and did not optimize bid prices. Agarwal et al. [21], Du et al. [22], Ghose and Yang [23] and Yang and Ghose [26] discuss the relationship between coefficients by linearly modeling the relationship between the indicators and examining the posterior distribution of the coefficients. Yang et al. [25] focus on the hierarchical structure that exists among ads, platforms, and users and utilize Hierarchical Bayes to estimate click rates for specific ads, platforms, and users.

4 Method

Section titled “4 Method”Our proposed method, Bayesian advertising combinatorial bandit (Bayesian AdComB), consists of three subroutines inspired by the method of Nuara et al. [17]. The flow of the proposed method is shown in Algorithm 1.

The algorithm first updates the probability model for each keyword using the advertising metrics obtained in the previous round by update subroutine (line 5). We model the relationships among advertising metrics by a Bayesian network and derive the posterior distribution by Bayesian inference. Next, exploration subroutine (line 6) selects the functions and for optimization from the model

Algorithm 1 Bayesian AdComB

Section titled “Algorithm 1 Bayesian AdComB”Require: set \mathcal{B} of bid price, prior distribution \{\mathcal{M}_i^{(0)}\}_{i=1}^N, total budget B, total round T1: \bar{R} \leftarrow R2: for t \in \{1, ..., T\} do3: if \bar{B} > 0 then4: for i \in \{1, ..., N\} do \mathcal{M}_{i}^{(t)} \leftarrow \mathbf{Update}\left(\mathcal{M}_{i}^{(t-1)}, \left\{\mathrm{imp}_{i}^{\tau}, \mathrm{click}_{i}^{\tau}, \mathrm{cv}_{i}^{\tau}, \mathrm{cost}_{i}^{\tau}\right\}_{\tau=1}^{t-1}\right)5: ▶ Update subroutine (\hat{u}_i^t(b), \hat{c}_i^t(b)) \leftarrow \text{Exploration}(\mathcal{M}_i^{(t)}, \mathcal{B})6: ⊳ Exploration subroutine7. \{\hat{b}_i^t\}_{i=1}^N \leftarrow \text{Optimize}\left(\left\{\left(\hat{u}_i^t(b), \hat{c}_i^t(b)\right)\right\}_{i=1}^N, \mathcal{B}, \frac{\bar{B}}{T-t+1}\right)8:

▷ Optimization subroutine

bid with \{\hat{b}_i^t\}_{i=1}^N9: \bar{B} \leftarrow \bar{B} - \sum_{i=1}^{N} \operatorname{cost}_{i}^{t}10:11:12: break13: end if14: end fordistribution. We utilize a bandit algorithm for this selection. Finally, optimization subroutine (line 8) performs optimization using the function selected in the exploration subroutine. We reformulate Eq. (1) as an integer programming problem and optimize it using a general-purpose integer programming solver. Each subroutine is described in detail in the following subsections.

4.1 Update Subroutine

Section titled “4.1 Update Subroutine”The purpose of this subroutine is to estimate the distribution of conversions and advertising costs for each bid price candidate. Estimating these factors as a distribution rather than a point enables estimation that accounts for the high uncertainty. Our method constructs a Bayesian network to model the relationship between the bid price, the number of impressions, clicks, and conversions and the advertising costs, which are the advertising metrics available to advertisers. We then use Bayesian inference to obtain the posterior distribution.

For the probability model (prior distribution, likelihood function) in the generation process of the advertising metrics, if the log data accumulated so far exists, WAIC [27] in the log data is used as a criterion to select one of several candidates. In this paper, we propose four likelihood functions and two prior distributions that we consider reasonable. Although it is difficult to claim that a stochastic model is valid, the goodness of fit of a stochastic model to log data can be quantitatively evaluated by calculating WAIC in log data for any possible stochastic model. Therefore, it is possible to quantitatively compare stochastic models that are not included in this paper and select the model to be utilized. See Section 5.3.1 for the results of the WAIC comparison on the log data of the stochastic model proposed in this paper.

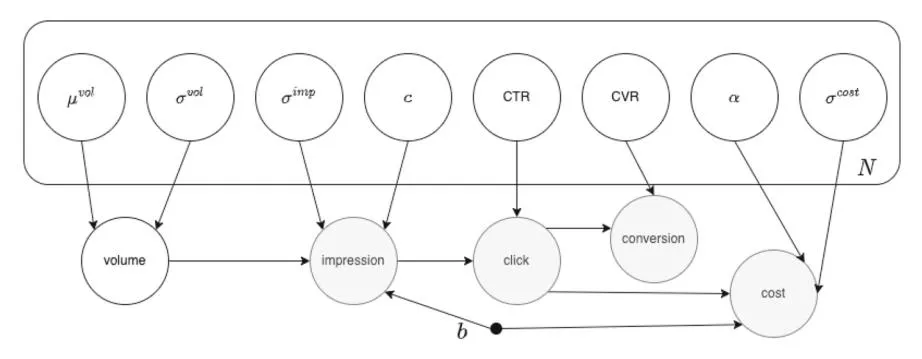

We consider four likelihood functions: (1) expressed by Fig. 3 and Eq. (2), (2) expressed by Fig. 4 and Eq. (3), (3) expressed by Fig. 5 and Eq. (4), and (4) expressed by Fig. 6 and Eq. (5). Note that these likelihood functions indicate the likelihood function

Fig. 3 Bayesian network with likelihood function 1

for the keyword i, where represents the normal distribution with mean and standard deviation , and Binomial(n, p) represents the binomial distribution with number of trials n and probability p.

\begin{aligned} \text{volume}_{i} &\sim \mathcal{N}\left(\mu_{i}^{\text{vol}}, \sigma_{i}^{\text{vol}^{2}}\right) \\ \text{imp}_{i} &\sim \mathcal{N}\left(\frac{b_{i}^{2}}{b_{i}^{2} + c_{i}^{2}} \cdot \text{volume}_{i}, \sigma_{i}^{\text{imp}^{2}}\right) \\ \text{click}_{i} &\sim \text{Binomial (imp}_{i}, \text{CTR}_{i}) \\ \text{cv}_{i} &\sim \text{Binomial (click}_{i}, \text{CVR}_{i}) \\ \text{cost}_{i} &\sim \mathcal{N}\left(\alpha_{i} \cdot b_{i} \cdot \text{click}_{i}, \sigma_{i}^{\text{cost}^{2}}\right). \end{aligned} \tag{2}