Networked Stochastic Multi Armed Bandits With Combinatorial Strategies

Abstract**—In this paper, we investigate a largely extended version of classical MAB problem, called** networked combinatorial bandit problems**. In particular, we consider the setting of a decision maker over a networked bandits as follows: each time a combinatorial strategy, e.g., a group of arms, is chosen, and the decision maker receives a reward resulting from her strategy and also receives a** side bonus resulting from that strategy for each arm’s neighbor. This is motivated by many real applications such as on-line social networks where friends can provide their feedback on shared content, therefore if we promote a product to a user, we can also collect feedback from her friends on that product. To this end, we consider two types of side bonus in this study: side observation and side reward**. Upon the number of arms pulled at each time slot, we study two cases:** single-play and combinatorial-play**. Consequently, this leaves us four scenarios to investigate in the presence of side bonus: Single-play with Side Observation, Combinatorial-play with Side Observation, Single-play with Side Reward, and Combinatorial-play with Side Reward. For each case, we present and analyze a series of** zero regret polices where the expect of regret over time approaches zero as time goes to infinity. Extensive simulations validate the effectiveness of our results.

I. INTRODUCTION

Section titled “I. INTRODUCTION”A multi-armed bandits problem (MAB) problem is a basic sequential decision making problem defined by a set of strategies. At each decision epoch, a decision maker selects a strategy that involves a combination of random bandits or variables, and then obtains an observable reward. The decision maker learns to maximize the total reward obtained in a sequence of decisions through history observation. MAB problems naturally capture the fundamental tradeoff between exploration and exploitation in sequential experiments. That is, the decision maker must exploit strategies that did well in the past on one hand, and explore strategies that might have higher gain on the other hand. MAB problems now play an important role in online computation under unknown environment, such as pricing and bidding in electronic commerce [?], [?], Ad placement on web pages [?], source routing in dynamic networks [?], and opportunistic channel accessing in cognitive radio networks [?], [?]. In this paper, we investigate a largely extended version of classical MAB problem, called networked combinatorial bandit problems. In particular, we consider the setting of a decision maker over a networked bandits as follows: each time a combinatorial strategy, e.g., a group of arms, is chosen, and the decision maker receives a direct reward resulting from her strategy and also receives a

side bonus (either observation or reward) resulting from that strategy for each arm’s neighbors.

In this study, we take as input a relation graph G that represents the correlation among K arms. In the standard setting, pulling an arm i gets reward and observation Xi,t, while in the networked combinatorial bandit problem with side bonus, one also gets side observation or even reward due to the similarity or potential influence among neighboring arms. We consider two types of side bonus in this work: (1) Side-observation: by pulling arm i at time t one gains the direct reward associated with i and also observes the reward of her neighboring arms. Such side-observation [?] is made possible in settings of on-line social networks where friends can provide their feedback on shared content, therefore if we promote a product to a user, we can also collect feedback from her friends on that product; (2) Side-reward: in many practical applications such as recommendation in social networks, pulling an arm i not only yields side observation on neighbors, but also receives extra rewards. That is by pulling arm i one gains the reward associated with i together with her neighboring arms directly. This setting is motivated by the observation that users are usually influenced by her friends when making purchasing decisions. [?].

Despite of many existing results on MAB problems against unknown stochastic environment [?], [?], [?], [?], [?], their adopted formulations do not fit those applications that involve either side bonus or exponentially large number of candidate strategies. There are several challenges facing our new study. First of all, under combinatorial setting, the number of candidate strategies could be exponentially large, if one simply treats each strategy as an arm, the resulting regret bound is exponential in the number of variables or arms. Traditional MAB assumes that all the arms are independent, which is inappropriate in our setting. In the presence of side bonus, how to appropriately leverage additional information in order to gain higher rewards is another challenge. To this end, we explore a more general formulation for networked combinatorial bandit problems under four scenarios, namely, single/combinatorial play with side observation, single/combinatorial play with side reward. The objective is to minimize the upper bound of regret (or maximize the total reward) over time.

The contributions of this paper are listed as follows:

• For Single-play with Side Observation case, we present the first distribution-free learning (DFL) policy, whose time and space complexity are bounded by O(K). Our policy achieves zero regret that does not depend on , the minimum distance between the best static strategy and any other strategy.

- For Combinatorial-play with Side Observation case, we present a learning policy with zero regret. Compared with traditional MAB problem without side bonus, we reduce the regret bound significantly.

- For Single-play with Side Rewards case, we develop a distribution-free zero regret learning policy. We theoretically show that this scheme converges faster than any existing method.

- For Combinatorial-play with Side Rewards case, by assuming that the combinatorial problem at each decision point can be solved optimally, we present the first distribution-free zero regret policy.

We evaluate our proposed learning policy through extensive simulations and simulation results validate the effectiveness of our schemes.

The remainder of this paper is organized as follows. We first give a formal description of networked combinatorial multi-armed bandits problem in Section II. We study Single-play with Side Observation case in Section III. In Section IV, we study Combinatorial-play with Side Observation case. Single-play with Side Rewards case has been discussed in Section V. In Section VI, we study Combinatorial-play with Side Rewards case. We evaluate our policies via extensive simulations in Section VII. We review related works in Section VIII. We conclude this paper, and discuss limitations as well as future works in Section IX. Most notations used in this paper are summarized in Table I.

II. MODELS AND PROBLEM FORMULATION

Section titled “II. MODELS AND PROBLEM FORMULATION”In the standard MAB problem, a K-armed bandit problem is defined by K distributions , each arm with respective means . When the decision maker pulls arm i at time t, she receives a reward . We assume all rewards are independent, and all have support in [0,1]. Let i=1 denote the optimal arm, and be the difference between the best arm and arm i.

The relation graph G=(V,E) over the K arms describes the correlations among them, where an undirected link indicates the correlation between two neighboring arms i and j. In the standard setting, pulling an arm i gets reward and observation , while in the networked combinatorial bandit problem with side bonus, one also gets side observation or even reward from neighboring arms due to the similarity or potential influence among them. Let N(i) denote the set of neighboring arms of arm i and . In this work, we consider two types of side bonus:

• Side observation: by pulling arm i at time t one gains the reward associated with i and also observes the reward of i’s neighboring arm . This is motivated by many real applications, for example, in today’s online social network, friends can provide their

- feedback on shared content, therefore if we promote a product to one user, we can also collect feedback from her friends on that product;

- Side reward: by pulling an arm i not only yields side observation on neighbors, but also receives rewards from them, i.e., the total rewards would be . This setting is motivated by the observation that in many practical applications such as recommendation in social networks, users are usually influenced by her friends when making purchasing decisions.

Upon the number of arms pulled at each time slot, we will study single-play case and combinatorial-play case.

- In the single-play case, the decision maker selects one arm at each time slot, e.g., traditional MAB problem belongs to this category;

- In the combinatorial-play case, the decision maker requires to select a combination of arms that satisfies given constraints. One such example is online advertising, assume an advertiser can only place up to m advertisements on his website, he repeatedly selects a set of m advertisements, observes the click-through-rate, with the goal of maximizing the average click-throughrate. This problem can be formulated as a combinatorial MAB problem where each arm represents one advertisement, subject to the constraint that one can play at most m arms at each time slot. In the combinatorial case, at each time slot t, an M-dimensional strategy vector is selected under some policy from the feasible strategy set F. By feasible we mean that each strategy satisfies the underlying constraints imposed to F. We use to index strategies of feasible set F in the decreasing order of average reward , e.g., has the largest average reward. Note that a strategy may consist of less than M random variables, as long as it satisfies the given constraints. We then set i = 0 for any empty entry i.

In either case, the objective is to minimize long-term regret after n time slots, defined by cumulative difference between the received reward and the optimal reward.

Consequently, this leaves us four scenarios to investigate: Single-play with Side Observation, Combinatorial-play with Side Observation, Single-play with Side Reward, and Combinatorial-play with Side Reward. We then describe the problem formulation for each case. We use to denote index of selected arm (resp. strategy) by the decision maker at time slot t, and subscript 1 to denote the optimal arm (resp. strategy) in the four cases. We evaluate policies using regret, , which is defined as the difference in the total expected reward (over n rounds) between always playing the optimal strategy and playing arms according to the policy. We say a policy achieves zero regret if the expected average regret over time approaches zero as time goes to infinity, i.e., as .

- Single-play with Side Observation (SSO). In this case, the decision maker pulls an arm i, observes all , , and gets a reward . The regret by time slot n is written

TABLE I SUMMARY OF NOTATIONS

Section titled “TABLE I SUMMARY OF NOTATIONS”| \begin{array}{c ccccccccccccccccccccccccccccccccccc | ||

|---|---|---|

| \begin{array}{cccccccccccccccccccccccccccccccccccc | Ü | |

| \begin{array}{cccccccccccccccccccccccccccccccccccc | K | number of arms |

| \begin{array}{cccccccccccccccccccccccccccccccccccc | M | number of selected arms |

| \begin{array}{c} \mu_i \\ N_i \\ N_i \\ \Delta_i \\ B_{i,t} \\ O_{i,t} \\ O_{i,t} \\ M \\ \hline X_{i,t} \\ \hline X_{i,t} \\ H \\ C \\ C \\ C \\ F \\ R_{x,t} \\ G_x \\ Y_x \\ N \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C | G | relation graph over the arms |

| \begin{array}{c} \mu_i \\ N_i \\ N_i \\ \Delta_i \\ B_{i,t} \\ O_{i,t} \\ O_{i,t} \\ M \\ \hline X_{i,t} \\ \hline X_{i,t} \\ H \\ C \\ C \\ C \\ F \\ R_{x,t} \\ G_x \\ Y_x \\ N \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C | observation/direct reward on arm i at time t | |

| \begin{array}{c} N_i \\ \Delta_i \\ B_{i,t} \\ O_{i,t} \\ O_{i,t} \\ \overline{X}_{i,t} \\ H \\ C \\ C \\ F \\ R_{x,t} \\ S \\ X_x \\ Y_x \\ N \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C \\ C | mean of | |

| \begin{array}{c ccccccccccccccccccccccccccccccccccc | set of neighboring arms of arm i | |

| \begin{array}{c ccccccccccccccccccccccccccccccccccc | the distance between the best strategy and strategy | |

| side reward received by arm from | ||

| number of observation times on arm by time | ||

| number of update times on side rewards of arm by time | ||

| time averaged value of observation on arm by time | ||

| H | vertex-induced subgraph of G composed by arms with | |

| \begin{array}{cccccccccccccccccccccccccccccccccccc | ||

| \begin{array}{cccccccccccccccccccccccccccccccccccc | F | feasible strategy (arm or com-arm) set |

| set of neighboring arms of component arms in com-arm maximum of among all com-arms combinatorial side reward received by com-arm from the distance between the best strategy and strategy | ||

| maximum of among all com-arms combinatorial side reward received by com-arm from the distance between the best strategy and strategy | mean of | |

| combinatorial side reward received by com-arm from the distance between the best strategy and strategy | set of neighboring arms of component arms in com-arm | |

| the distance between the best strategy and strategy | N | maximum of among all com-arms |

| 6, 6, | combinatorial side reward received by com-arm from | |

| A 11 | the distance between the best strategy and strategy | |

| minimum of among all strategies | minimum of among all strategies |

as,

(1)

Here denotes the index of arm played at t.

- Combinatorial-play with Side Observation (CSO). Rather than pulling a single arm, the decision maker pulls a set of arms, , receives a reward

and also observes reward for each neighboring arm , where is the set of neighboring arms for selected strategy . Therefore, let denote the expected reward from the optimal strategy, the regret is defined as

(2)

- Single-play with Side Rewards (SSR). When pulling an arm i, it yields a total reward

Therefore, the best arm shall be the one with the maximum expected total reward. Let denote the mean of reward for arm i, and the maximum reward. The regret is

(3)

Note here, the optimal arm may differ from the optimal arm under single-play with side observation.

- Combinatorial-play Side Rewards (CSR). Different from combinatorial-play with side observation, the decision maker directly obtains the rewards from all neighboring arms. That is, the totally received reward includes direct reward by strategy x and side reward by its neighbors. Let be the set of neighboring arms for strategy x, and be the expected reward of . The combinatorial reward at time slot t is written as . We define the regret as

(4)

III. SINGLE-PLAY WITH SIDE OBSERVATION

Section titled “III. SINGLE-PLAY WITH SIDE OBSERVATION”We start with the case of Single-play with Side Observation. In this case, the decision maker learns to select an arm (resp. strategy) with maximum reward, meanwhile observes side information of its neighbors defined in relation graph. Our proposed policy, which is the first distribution free learning policy for SSO reffered to as DFL-SSO, is shown in Algorithm 1. As shown in Line 2-5, the decision maker updates all neighbors’ side information, i.e., number of observation up to current time, and time-averaged reward. The key idea behind the algorithm is that side-observation potentially reduces the regret as the decision maker can explore more without pain, thus gain more history information to exploit.

To theoretically analyze the benefit of side observation, we novelly leverage the technique of graph partition and clique cover. The basic idea in standard analysis of regret bound with side observation in distribution-dependent case is to use clique cover of relation graph, and use the arm with maximum inside each cilque to represent the clique for analysis. While standard proof of distribution-free regret bound is to divide the arms into two sets via a threshold on , and then respectively analyze the bounds of the two sets of arms. Therefore, to obtain a distribution-free result, we cannot directly use the arm with maximum inside a clique for representation to prove distribution-free regret bound, as the arms with smaller than are distributed inside cliques. To address this issue, we first partition the relation graph G using the predefined threshold, and then mainly analyze the benefit of side observation in one vertex-induced subgraph H for arms having above . In the subgraph H, it is then possible to analyze the distribution-free regret bound using the technique of clique cover.

Theorem 1 quantifies the benefit brought about by it, where it shows that the more side observation (e.g., smaller clique number) is, the smaller the upper bound of regret is.

Theorem 1: The expected regret of Algorithm 1 after n time slots is bounded by

\mathcal{R}_n \leq 15.94\sqrt{nK} + 0.74\mathcal{C}\sqrt{n/K},\tag{6}

where C is clique cover of vertex-induced subgraph H with arms of above threshold in relation graph G.

Proof: The proof is based on our novel combination of graph partition and clique cover. We first partition relation

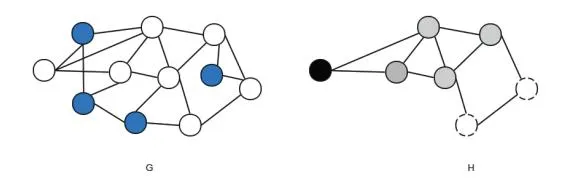

Fig. 1. Graph partition: G is relation graph, and H is vertex-induced graph that is covered by 3 cliques

Algorithm 1 Distribution-Free Learning policy for single-play with side observation (DFL-SSO)

1: For each time slot Select an arm i by maximizing

\overline{X}_{i,t} + \sqrt{\frac{\log\left(t/(KO_{i,t})\right)}{O_{i,t}}} \tag{5}

to pull

- 2: for do

- 3: 4:

- 5: end for

- 6: end for

graph to rewrite regret in terms of cliques, and then mainly tighten the upper bound by analyzing regret of cliques.

1. Partition relation graph and rewrite regret of subgraph H in terms of cliques.

Section titled “1. Partition relation graph and rewrite regret of subgraph H in terms of cliques.”We order the arms in an increasing order of . We use to split the K arms into two disjoint sets, one set with and the other set with (We will set the value of in later analysis). Let be the smallest index of arm satisfying . We remove all arms in from the relation graph G, as well as adjacent edges to nodes in . In this way, we get a subgraph H of G, over arms in . The regret satisfies,

\Re(n) \le n\Delta_{c_0} + \Re_H(n),\tag{7}

where is regret generated by selecting suboptimal arms in .

Consider a clique covering C of H, i.e., a set of cliques such that each is a clique and . We define the clique regret for any by

(8)

Since the set of cliques covers the whole graph H, we have

\mathfrak{R}_{H}(n) \le \sum_{c \in \mathcal{C}} R_{c}(n). \tag{9}

We give an illustration of the partition process in Fig. 1, where the relation graph G contains one small set of blue nodes representing with below , and the other large set of white nodes denoting with above . The vertex-induced subgraph H of is covered by a minimum of 3 cliques, respectively marked by black, gray and dash lines.

2. Regret analysis for regret of subgraph H

Section titled “2. Regret analysis for regret of subgraph H”In the rest part, we focus on proving upper bound of regret . Let , and denote the number of times (any arm in) clique c has been played up to time t, where is the number of times arm i has been selected up to time t. Similarly, we suppose that cliques are ordered in the increasing order of . Let for cliques in , , and . Let and . For better description, we use to denote the case of c = 0.

As every arms in a clique c must be observed for the same number of times, then for each clique and , we have

(5) (10)

Meanwhile,

\mathfrak{R}_H(n) = \sum_{c \in K} \mathfrak{R}_c = \sum_{c \in \mathcal{C}} l_0 \Delta_c + \sum_{i=1}^K \Delta_i T_i'(n), \tag{11}

Where denotes the number of arm i played after , and we refer to the second term as

Define

W = \min_{1 \le t \le n} W_{1,t},\tag{12}

and

U_{j,i} = \mathbf{1}_{W \in [v_{j+1}, v_j)} \Delta_i T_i'(n). \tag{13}

We have the following for ,

(14)

(15)

For the first term of Equation (15), we have:

(16)

We have the first equation as and . To bound the second term of Equation (15), we record

(18)

after . To pull a suboptimal arm i at t, one must have . By Algorithm 1, we have }, since once we have pulled times arm i its index will always be lower than the index of arm 1.

Therefore, we have

+ n \sum_{c=1}^{\mathcal{C}} \mathbf{1}_{W < v_c} (\Delta_c - \Delta_{c-1}). \tag{19}

For any ,

\Delta_{i} \mathbf{E}(\tau_{i} | \tau_{i} > l_{0}) \tag{20}

\tag{21}$$ Let $l_0 = 8 \log \left( \frac{n}{\kappa} \Delta_i^2 \right) / \Delta_i^2$ . For $l \ge l_0$ , we have $$\log_{+}(t/(Kl)) \le \log_{+}(n/(Kl_0)) \le \left(\frac{n}{K} \times \frac{\Delta_i^2}{8}\right) \tag{22}$$ $$\leq \frac{l_0 \Delta_i^2}{8} \leq \frac{l \Delta_i^2}{8}.$$ Therefor, we have $$\frac{\Delta_i}{2} - \sqrt{\frac{\log_+(n/Kl)}{l}} \ge \frac{\Delta_i}{2} - \frac{\Delta_i}{\sqrt{8}} = a\Delta_i \qquad (24)$$ with $a = \frac{1}{2} - \frac{1}{\sqrt{8}}$ , $$\Delta_c l_0 \leq 8 \log\left(\frac{n}{K}\Delta_i^2\right)/\Delta_i \leq \frac{2}{e} \sqrt{n/K}$$ (25) To bound (21) using Hoeffding Bound, i.e., $$\mathbf{E}\{\tau_{i}|t>l_{0}\} \leq \sum_{l=l_{0}}^{+\infty} \mathbf{P}(\overline{X}_{i,l}-\mu_{i}\geq a\Delta_{i}) \qquad (26)$$ $$\leq \sum_{l=l_{0}}^{+\infty} \exp\left(-2l(a\Delta_{i})^{2}\right) \qquad (27)$$ $$= \sum_{l=l_0}^{+\infty} \frac{1 - 2l_0(a\Delta_i)^2}{1 - \exp(-2(a\Delta_i)^2)}$$ (28) $$\leq \frac{1}{1 - \exp(-2(a\Delta_i)^2)} \tag{29}$$ $$\leq \frac{1}{(2a\Delta_i)^2 - (-2(a\Delta_i)^2)} \tag{30}$$ $$= (2a\Delta_i)^2 - (-2(a\Delta_i)^2)$$ $$= \frac{1}{2a\Delta_i^2(1-a^2)}.$$ (31) Then we have $$\Delta_{i} \mathbf{E} \{ \tau_{i} | t > l_{0} \} \leq 8 \log \left( \frac{n}{K} \Delta_{i}^{2} \right) / \Delta_{i} + \frac{1}{2a \Delta_{i} (1 - a^{2})}$$ $$\leq \frac{2}{e} \sqrt{n/K} + \frac{\alpha^{-1}}{2a(1 - a^{2})} \sqrt{n/K}.$$ (32) Now we prove to bound $n \sum_{c=0}^{\mathcal{C}} \mathbf{P}(W \leq v_c) (\Delta_c - \Delta_{c-1})$ . Recall that $\Delta_{c_0} \leq \delta_0 \leq \Delta_{c_0+1}$ , and let $\delta_{c_0}$ be $\Delta_{c=0}$ . Taking $\mathbf{P}(W \leq \mu_1 - \frac{\Delta_c}{2})$ as an nonincreasing function of $\Delta_c$ , we have $$\sum_{c=1}^{C} \mathbf{P}(W \le v_c) (\Delta_c - \Delta_{c-1})$$ $$\le \delta_0 - \Delta_{c_0} + \int_{\delta_0} 1 \mathbf{P}(W \le \mu_1 - \frac{u}{2}) du. \tag{33}$$ For a fixed $u \in [\delta_0, 1]$ and $f(u) = 8 \log(\sqrt{n/K}u)/u^2$ , we have <span id="page-4-1"></span> $$\mathbf{P}(W \le \mu_{1} - \frac{u}{2})$$ $$= \mathbf{P}\left(\exists 1 \le l \le n : \overline{X}_{1,l} + \sqrt{\frac{\log(n/(Kl))}{l}} < \mu_{1} - \frac{u}{2}\right)$$ $$\le \mathbf{P}\left(\exists 1 \le l \le f(u) : \mu_{1} - \overline{X}_{1,l} > \sqrt{\frac{\log(n/(Kl))}{l}}\right)$$ $$+\mathbf{P}\left(\exists 1 \le l \le f(u) : \mu_{1} - \overline{X}_{1,l} > \frac{u}{2}\right)$$ (34) (23) Let $P_1$ denote the first term of (34), using the form of $\frac{1}{2^{m+1}}f(u) \leq l \leq \frac{1}{2^m}f(u)$ , we have $$P_{1} \leq \sum_{m=1}^{\infty} \mathbf{P} \left( \exists \frac{1}{2^{m+1}} f(u) \leq l \leq \frac{1}{2^{m}} f(u) : \\ l(\mu_{1} - \overline{X}_{m,l}) > \sqrt{\frac{f(u)}{2^{m+1}} \log(\frac{n2^{m}}{Kf(u)})} \right) \\ \leq \sum_{m=1}^{\infty} \exp \left( -2 \frac{f(u)2^{-(m+1)} \log(\frac{n2^{m}}{Cf(u)})}{f(u)2^{-m}} \right) \\ = 2 \frac{Kf(u)}{n}$$ (35) Let $P_2$ denote the first term of (34), using the form of (27) $2^m f(u) \le l \le 2^{m+1} f(u)$ , we have similarly, $$P_{2} \leq \sum_{m=1}^{\infty} \mathbf{P} \left( \exists 2^{m} f(u) \leq l \leq 2^{m+1} f(u) : \\ l(\mu_{1} - \overline{X}_{m,l}) > \frac{lu}{2} \right)$$ $$\leq \sum_{m=0}^{\infty} \exp \left( -2 \frac{(2^{m-1} f(u)u)^{2}}{f(u)2^{m+1}} \right)$$ $$\leq \frac{1}{\exp(f(u)u^{2}/4) - 1}$$ $$\leq \frac{1}{nu^{2}/K - 1}$$ (36) The last inequality comes from f(u) is upper bounded by 4n/(eK). By taking integrity on $P_1$ and $P_2$ , we respectively have $$n \int_{\delta_0}^1 P_1 du \leq n \frac{2K}{n} \int_{\delta_0}^1 f(u) du$$ $$= n \frac{2K}{n} \left[ \frac{8 \log(e \sqrt{n/K} u)}{u} \right]_1^{\delta_0}$$ $$\leq \frac{8 \log(e \alpha)}{\alpha} \sqrt{nK},$$ (38) and $$n\int_{\delta_0}^1 P_2 du \le \frac{1}{2} \log \left(\frac{\alpha+1}{\alpha-1}\right) \sqrt{nK}.$$ (39) Instantly we have $$n \sum_{c=0}^{C} \mathbf{P}(W \le v_c) (\Delta_c - \Delta_{c-1})$$ $$\le n(\delta_0 - \Delta_{c_0}) + \left(\frac{8\log(e\alpha)}{\alpha} + \frac{1}{2}\log\left(\frac{\alpha+1}{\alpha-1}\right)\right) \sqrt{nK}$$ Finally, we get the regret bounded by $$\mathcal{R}_{n} \leq \sum_{c \in \mathcal{C}} \frac{2}{e} \sqrt{n/K} + \left(3\alpha + \frac{8\log(e\alpha)}{\alpha} + \frac{1}{2}\log\left(\frac{\alpha+1}{\alpha-1}\right) + \text{set for this problem consists of 7 feasible strategies, i.e., independent sets of arms in } G$$ $$\frac{\alpha^{-1}}{2a(1-a^{2})} \sqrt{nK}$$ $$(40) \qquad \mathbf{s}_{1} = \{1\}, \cup_{i \in \mathbf{s}_{1}} N_{i} = \{1, 2\}$$ Let $\alpha = e$ , and we already have $a = \frac{1}{2} - \frac{1}{\sqrt{8}}$ , then $$\mathcal{R}_n \leq 15.94\sqrt{nK} + 0.74\mathcal{C}\sqrt{n/K}.\tag{41}$$ #### <span id="page-5-0"></span>IV. COMBINATORIAL-PLAY WITH SIDE OBSERVATION In this section, we consider combinatorial-play with side observation. In this case, an intuitively extension is to take each strategy as an arm ( we name it com-arm), and then apply the algorithm for SSO to solve the problem. However, the key question is how to utilize the side-observation on arms defined in relation graph to gain more observation on comarms, that is, how to define neighboring com-arms. To this end, we introduce the concept of strategy relation graph to model the correlation among com-arms, by which we convert the problem of CSO to SSO. The construction process for strategy relation graph is as follows. We define strategy relation graph SG(F, L) for strategies in F, where F is vertex set, and L is edge set. Each strategy $s_x$ is denoted by a vertex, and a link $l = (s_x, s_y)$ in L connects two distinct vertexes $s_x$ and $s_y$ if $s_y \in Y_x$ and vice versa. The neighbor definition for strategies is natural as once a strategy is played, the union of neighbors of arms in this strategy could be observed according to neighbor definition for arms in G, which surely reward of any strategy composed by these observed arms is also observed. We give an example in Fig. 2. There are 4 arms in relation graph G, indexed by i = 1, 2, 3, 4. The combinatorial MAB problem is to select a maximum weighted independent set of arms where unknown   <span id="page-5-1"></span>Fig. 2. Convert combinatorial-play to single-play: constructing strategy relation graph SG(F,L) based on arm relation graph G bandit is weight. As shown in Fig. 2, the feasible strategy $$\mathbf{s}_{1} = \{1\}, \cup_{i \in \mathbf{s}_{1}} N_{i} = \{1, 2\}$$ $$\mathbf{s}_{2} = \{2\}, \cup_{i \in \mathbf{s}_{2}} N_{i} = \{1, 2, 3\}$$ $$\mathbf{s}_{3} = \{3\}, \cup_{i \in \mathbf{s}_{3}} N_{i} = \{2, 3, 4\}$$ $$\mathbf{s}_{4} = \{4\}, \cup_{i \in \mathbf{s}_{4}} N_{i} = \{3, 4\}$$ $$\mathbf{s}_{5} = \{1, 3\}, \cup_{i \in \mathbf{s}_{5}} N_{i} = \{1, 2, 3, 4\}$$ $$\mathbf{s}_{6} = \{1, 4\}, \cup_{i \in \mathbf{s}_{7}} N_{i} = \{1, 2, 3, 4\}$$ $$\mathbf{s}_{7} = \{2, 4\}, \cup_{i \in \mathbf{s}_{7}} N_{i} = \{1, 2, 3, 4\}$$ Taking s<sub>2</sub> and s<sub>5</sub> for illustration, the component arms of $s_2$ , i.e., $\{2\}$ , is a subset of $\bigcup_{i \in s_5} N_i = \{1, 2, 3, 4\}$ , and the component arms of $s_5$ , i.e., $\{1,3\}$ is also a subset of $\cup_{i\in\mathbf{s}_2}N_i=\{1,2,3\}$ . Therefore, the two strategies are connected in the relation graph SG. Consequently, we can convert the combinatorial-play MAB with side observation to a single-MAB with side observation. More specifically, taking each strategy as an arm, SG(F, L)is exactly a relation graph for com-arms in F. The problem turns into a single-play MAB problem where at each time slot the decision maker selects one com-arm from |F| ones to maximize her long-term reward. The algorithm is shown in Algorithm 2, and we derive the regret bound below directly. Theorem 2: The expected regret of Algorithm 2 after n time slots is bounded by $$\mathcal{R}_n \le 15.94\sqrt{n|F|} + 0.74\mathcal{C}\sqrt{n/|F|}.$$ (43) In the traditional distribution-free MAB by taking each comarm as an unknown variable [?], the regret bound would be $49\sqrt{n|F|}$ . Our theoretical result significantly reduces the regret and tightens the bound. <span id="page-6-2"></span>Distribution-Free Learning for combinatorial-play with side observation (DFL-CSO) 1: For each time slot $t = 0, 1, \dots, n$ Select a com-arm $s_x$ by maximizing <span id="page-6-8"></span> $$\overline{R}_{x,t} + \sqrt{\frac{\log\left(t/(KO_{x,t})\right)}{O_{x,t}}} \tag{42}$$ to pull - 2: UPDATE: for $y \in N_x$ do - 3: $O_{y,t+1} \leftarrow O_{y,t} + 1$ 4: $\overline{R}_{y,t+1} \leftarrow R_{y,t}/O_{y,t} + (1-1/O_{y,t})\overline{R}_{y,t}$ - <span id="page-6-0"></span>6: end for #### V. SINGLE-PLAY WITH SIDE REWARDS Though the single-play MAB with side reward have the same observation as the single-play MAB with side observation, the distinction on reward function makes the problem different. In the case of SSR, the reward function is side reward of the selected arm $I_t$ , instead of its direct reward. Here we treat the side reward of each arm as a new unknown random variable, i.e., we require to learn $B_{i,t}$ that is a combination of all direct rewards in $N_i$ . As direct rewards of arms in $N_i$ are observed asynchronously, we cannot update the observation on $B_{i,t}$ as the way in SSO where observation is symmetric between two neighboring nodes. The trick is updating the number of observation on $B_{i,t}$ only when direct rewards of all arm in $N_i$ are renewed. We use $O_{i,t}^b$ to denote this quantity to differ from $O_{i,t}$ which denotes the number of direct reward is observed. Therefore, whenever an arm is played or its neighbor is played, the number of observation on side reward $O_{i,t}^b$ can be updated only when the least frequently observed arm in $N_i$ is updated. That is, <span id="page-6-4"></span> $$O_{i,t}^b = \begin{cases} O_{i,t-1}^b + 1 & \text{if } \min_{j \in N_i} O_{j,t} \text{ is updated} \\ O_{i,t}^b & \text{Otherwise.} \end{cases}$$ (44) The algorithm for single-play MAB with side reward is summarized in Algorithm 3 where we directly use side reward $B_{i,t}$ as observation, and update $O_{i,t}^b$ according to (44). The regret bound of our proposed algorithm is presented in Theorem 3. <span id="page-6-5"></span>Theorem 3: The expected regret of Algorithm 3 after n time slots is bounded by <span id="page-6-6"></span> $$\mathcal{R}_n < 49K\sqrt{nK} \tag{46}$$ *Proof:* In this case, $B_{i,t} \in [0, K]$ , which indicates that the range of received reward is scaled by K at most. We normalize $B_{i,t} \in [0,1]$ . Using the same techniques in proof of MOSS algorithm [?], we get the normalized regret bound, and then the regret bound in (46) by scaling the normalized regret bound by K. In Algorithm 3, the number of observation times on side reward should be no less than the scenario without side observation. Therefore, Algorithm 3 would convergence to the optimality faster than the MOSS algorithm without side observation. <span id="page-6-3"></span>**Algorithm 3** Distribution-Free Learning policy for single-play with side reward (DFL-SSR) 1: For each time slot $t = 0, 1, \dots, n$ Select an arm i by maximizing $$\overline{B}_{i,t} + \sqrt{\frac{\log\left(t/(KO_{i,t}^b)\right)}{O_{i,t}^b}} \tag{45}$$ to pull - 2: for $k \in N_i$ do - 3: $O_{k,t+1} \leftarrow O_{k,t} + 1$ - 4: if $\min_{j \in N_k} O_{j,t}$ is updated - 5: $O_{k,t+1}^b = O_{k,t}^b + 1$ 6: $\overline{B}_{k,t+1} = \overline{B}_{k,t}/O_{k,t}^b + (1 1/O_{k,t}^b)\overline{B}_{k,t}$ - 8: end for - <span id="page-6-1"></span>9: end for #### VI. COMBINATORIAL-PLAY WITH SIDE REWARDS Now we consider the combinatorial-play case with side reward. Recall that in this scenario, it requires to select a comarm $s_x$ with maximum side reward, where the side reward is the sum of observed rewards of all arms neighboring to arms in $s_x$ . The case is more complicated than previous three cases, due to: 1) Asymmetric observations on side reward for neighboring nodes in one clique; 2) Probably exponential number of strategies caused arbitrary constraint. Therefore, it is complicated to analyze the regret bound if adopting the same techniques of combinatory-play with side observation. Instead of learning side reward of strategies directly, we learn the direct reward of arms that compose com-arms. <span id="page-6-7"></span>Algorithm Distribution-Free Learning policy combinatorial-play with side reward (DFL-CSR) 1: For each time slot $t = 0, 1, \dots, n$ Select a com-arm $s_x$ by maximizing $$\sum_{i \in Y_x} \left( \overline{X}_{i,t} + \sqrt{\frac{\max\left(\ln\frac{t^{2/3}}{KO_{i,t}}, 0\right)}{O_{i,t}}} \right) \tag{47}$$ to pull - 2: for $k \in Y_x$ do - 3: $O_{k,t+1} \leftarrow O_{k,t} + 1$ 4: $\overline{X}_{k,t+1} = \overline{X}_{k,t}/O_{k,t}^b + (1 1/O_{k,t}^b)\overline{X}_{k,t}$ - 5: end for - 6: end for <span id="page-6-9"></span>Theorem 4: The expected regret of Algorithm 4 after n time slots is bounded by $$\Re(n) \leq NK + \left(\sqrt{eK} + 8(1+N)N^3\right)n^{\frac{2}{3}} + (1 + \frac{4\sqrt{K}N^2}{e})N^2Kn^{\frac{5}{6}}.$$ (48) where $N \leq K$ is the maximum of $|Y_x|, x = 1 \dots |F|$ . *Proof:* See Appendix. <span id="page-7-2"></span><span id="page-7-1"></span> Fig. 4. Expected regret of DFL-CSO #### VII. SIMULATION Time slot (a) Sparse relation graph <span id="page-7-0"></span>In this section, we evaluate the performance of the proposed 4 algorithms in simulations. We mainly analyze the regret generated by each algorithm after a long time slot n = 10000. We first evaluate regret generated by DFL-SSO, and compare with MOSS learning policy. The experiment setting is as follows. We randomly generate a relation graph with 100 arms, each following an i.i.d random process over time with mean between [0, 1]. We then plot the accumulated regret and expected regret over time, as shown in Fig. [3\(a\).](#page-7-1) Though the expected regret over time by MOSS converges to a value around 0 that coincides with its theoretical bound in Fig. [3\(a\),](#page-7-1) it shows that its accumulated regret grows dramatically. It is oblivious the proposed algorithm with side information performs much better than MOSS, e.g., the accumulated regret and expected regret of our proposed algorithm (DFL-SSO) both converge to 0. For other 3 algorithms, as we first study the 3 variants of MAB problem, there are no candidate algorithms to compare. We show the trend of expected regret over time for each case. In evaluation of Algorithm 2, we note that the regret bound contains the terms: number of com-arms and number of cliques. The upper bound becomes huge if the number of com-arms is voluminous, and a small clique number can significantly reduce the bound. In order to investigate the impact experimentally, we then test for regret both under sparse relation graph and dense relation graph. In Fig. [4\(a\),](#page-7-2) where the arms are uniformly and randomly connected with a low probability of 0.3, it shows that the expected regret slowly increases beyond 0. While in Fig. [4\(b\),](#page-7-3) where the arms are uniformly and randomly connected with a higher probability  Time slot <span id="page-7-3"></span>(b) Dense relation graph <span id="page-7-4"></span>Fig. 5. Expected regret of DFL-SSR  <span id="page-7-5"></span>Fig. 6. Expected regret of DFL-CSR of 0.6, it shows that the expected regret gradually approaches 0. It implicates that the side observation indeed helps to reduce regret if one can observe more, even for the case that previous literature show that it will introduce exponential regret by learning each individual com-arm of a huge feasible strategy set [**?**]. The simulation results for Algorithm 3 and 4 are shown in Fig. [5](#page-7-4) and [6,](#page-7-5) where the expected regret in both figures converges to 0 dramatically. #### VIII. RELATED WORKS <span id="page-8-0"></span>The classical multi-armed bandit problem does not assume that existence of side bonus. More recently, [?] and [?] considered the networked bandit problem in the presence of side observations. They study single play case and propose several policies whose regret bound depends on $\Delta_{\min}$ , e.g., an arbitrarily small $\Delta_{\min}$ will invalidate the zero-regret result. In this work, we present the first distribution free policy for single play with side observation case. For the variant with combinatorial play without side bonus, Anantharam et al. [?] firstly consider the problem that exactly N arms are selected simultaneously without constraint among arms. Gai et al. recently extend this version to a more general problem with arbitrary constraints [?]. The model is also relaxed to a linear combination of no more than N arms. However, the results presented in [?] are distribution-dependent. To this end, we are the first to study combinatorial play case in the presence of side bonus. In particular, for the combinatorial play with side observation case, we develop a distribution-free zero regret learning policy. We theoretically show that this scheme converges faster than existing method. And for the combinatorial play with side reward case, we propose the first distribution-free learning policy that has zero-regret. #### IX. CONCLUSION <span id="page-8-1"></span>In this paper, we investigate networked combinatorial bandit problems under four cases. This is motivated by the existence of potential correlation or influence among neighboring arms. We present and analyze a series of zero regret polices for each case. In the future, we are interested in investigating some heuristics to improve the received regret in practice. For example, at each time slot, instead of playing the selected arm/strategy with maximum index value (Equation (5), (42)), we will play the arm/strategy that has maximum experimental average observation among the neighbors of $I_t$ . Therefore, we ensure that the received reward is better than the one with maximum index value. #### X. APPENDIX ## A. Proof of Theorem 4 To prove the theorem, we will use Chernoff-Hoeffding bound and the maximal inequality by Hoeffding [?]. Lemma 1: (Chernoff-Hoeffding Bound [?]) $\xi_1,\ldots,\xi_n$ are random variables within range [0,1], and $E[\xi_t|\xi_1,\ldots,\xi_{t-1}]=\mu, \forall 1\leq t\leq n.$ Let $S_n=\sum \xi_i$ , then for all a>0 $$\mathbf{P}(S_n \ge n\mu + a) \le \exp\left(-2a^2/n\right),$$ $$\mathbf{P}(S_n \le n\mu - a) \le \exp\left(-2a^2/n\right).$$ (49) <span id="page-8-4"></span>Lemma 2: (Maximal inequality) [?] $\xi_1, \dots, \xi_n$ are i.i.d random variables with expect $\mu$ , then for any y > 0 and n > 0, $$\mathbf{P}\left(\exists \tau \in 1, \dots, n, \sum_{t=1}^{\tau} (\mu - \xi_t) > y\right) < \exp(-\frac{2y^2}{n}).$$ (50) Each com-arm $s_x$ and its neighboring arm set $Y_x$ actually compose a new com-arm, which could be denoted by $Y_x$ as $\mathbf{s}_x\subset Y_x$ . Each new com-arm $Y_x$ corresponds to a unknown bonus $CB_{x,t}$ with mean $\sigma_x$ . Recall that we have assumed $\sigma_1\geq\cdots\geq\sigma_{|F|}$ . As com-arm $Y_1$ is the optimal com-arm, we have $\Delta_x=\sigma_1-\sigma_x$ , and let $Z_x=\sigma_1-\frac{\Delta_x}{2}$ . We further define $W_1=\min_{1\leq t\leq n}W_{1,t}$ . We may assume the first time slot $z=\arg\min_{1\leq t\leq n}W_{1,t}$ . ### 1. Rewrite regret in terms of arms Separating the strategies in two sets by $\Delta_{x_0}$ of some comarm $\mathbf{s}_{x_0}$ (we will define $x_0$ later in the proof), we have <span id="page-8-2"></span> $$\mathfrak{R}_{n} = \sum_{x=1}^{x_{0}} \Delta_{x} E[T_{x,n}] + \sum_{x=x_{0}+1}^{|F|} \Delta_{x} E[T_{x,n}]$$ $$\leq \Delta_{x_{0}} n + \sum_{x=x_{0}+1}^{|F|} \Delta_{x} E[T_{x,n}]. \tag{51}$$ We then analyze the second term of (51). As there may be exponential number of strategies, counting $T_{x,n}$ of each comarm by the classic upper-confidence-bound analysis yields regret growing linearly with the number of strategies. Note that each com-arm consists of N arms at most, we can rewrite the regret in terms of arms instead of strategies. We then introduce a set of counters $\{\widetilde{T}_{x,n}|k=1,\ldots,K\}$ . At each time slot, either 1) a com-arm with $\Delta_x \leq \Delta_{x_0}$ or 2) a comarm with $\Delta_x > \Delta_{x_0}$ is played. In the first case, no $\widetilde{T}_{x,n}$ will get updated. In the second case, we increase $\widetilde{T}_{x,n}$ by 1 for any arm $k = \arg\min_{j \in Y_x} \{O_{j,t}\}$ . Thus whenever a com-arm with $\Delta_x > \Delta_{x_0}$ is chosen, exactly one element in $\{\widetilde{T}_{x,n}\}$ increases by 1. This implies that the total number that strategies of $\Delta_x > \Delta_{x_0}$ have been played is equal to sum of all counters in $\{\widetilde{T}_{x,n}\}$ , i.e., $\sum_{x=x_0+1}^{|F|} E[T_{x,n}] = \sum_{k=1}^K \widetilde{T}_{x,n}$ . Thus, we can rewrite the second term of (51) as $$\sum_{x=x_0+1}^{|F|} \Delta_x E[T_{x,n}] \le \Delta_X \sum_{x=x_0+1}^{|F|} E[T_{x,n}] \le \Delta_X \sum_{k=1}^K E[\widetilde{T}_{x,n}].$$ (52) Let $I_{k,t}$ be the indicator function that equals 1 if $\widetilde{T}_{x,n}$ is updated at time slot t. Define the indicator function $\mathbf{1}\{y\}=1$ if the event y happens and 0 otherwise. When $I_{k,t}=1$ , a com-arm $Y_x$ with $x>x_0$ has been played for which $O_{k,t}=\min\{O_{j,t}: \forall j\in Y_x\}$ . Then <span id="page-8-3"></span> $$\widetilde{T}_{x,n} = \sum_{t=1}^{n} \mathbf{1}\{I_{k,t} = 1\}$$ (53) $$\leq \sum_{t=1}^{n} \mathbf{1}\{W_{1,t} \leq W_{x,t}\} \tag{54}$$ $$\leq \sum_{t=1}^{n} \mathbf{1}\{W_1 \leq W_{x,t}\} \tag{55}$$ $$\leq \sum_{t=1}^{n} \mathbf{1}\{W_1 \leq W_{x,t}, W_1 \geq Z_x\}$$ (56) $$+\sum_{t=1}^{n} \mathbf{1}\{W_1 \le W_{x,t}, W_1 < Z_x\}$$ (57) $$=\widetilde{T}_{k,n}^1 + \widetilde{T}_{k,n}^2. \tag{58}$$ We use $\widetilde{T}_{k,n}^1$ and $\widetilde{T}_{k,n}^2$ to respectively denote Equation (56) and (57) for short. Next we show that both of the terms are bounded. 2. Bounding $\widetilde{T}_{k,n}^1$ Here we note the event $\{W_1 \geq Z_x\}$ and $\{W_{x,t} > W_1\}$ implies event $\{W_{x,t} > Z_x\}$ . Let $\ln_+(y) = \max(\ln(y),0)$ . For any positive integer $l_0$ , we then have, $$\widetilde{T}_{k,n}^1 \le \sum_{t=1}^n \mathbf{1}\{W_{x,t} \ge Z_x\}$$ (59) $$\leq l_0 + \sum_{t=l_0}^n \mathbf{1}\{W_{x,t} \geq Z_x, \widetilde{T}_{k,t}^1 > l_0\}$$ (60) $$= l_0 + \sum_{t=l_0}^n \mathbf{P}\{W_{x,t} \ge Z_x, \widetilde{T}_{k,t}^1 > l_0\}$$ (61) $$= l_{0} + \sum_{t=l_{0}}^{n} \mathbf{P} \left\{ \sum_{j \in Y_{x}} \left( \overline{X}_{j,t} + \sqrt{\frac{\ln_{+} \left( \frac{t^{2/3}}{KO_{j,t}} \right)}{l_{0}}} \right) \right.$$ $$\geq \sum_{j \in Y_{x}} \mu_{j} + \frac{\Delta_{x}}{2}, \widetilde{T}_{k,t}^{1} > l_{0} \right\}. \tag{62}$$ The event $$\left\{\sum_{j\in Y_x}\left(\overline{X}_{j,t}+\sqrt{\frac{\ln_+(t^{2/3}/KO_{j,t})}{O_{j,t}}}\right)\geq\sum_{j\in Y_x}\mu_j+\frac{\Delta_x}{2}\right\}$$ indicates that the following must be true, $$\exists j \in Y_x, \overline{X}_{j,t} + \sqrt{\frac{\ln_+(t^{2/3}/KO_{j,t})}{O_{j,t}}} \ge \mu_j + \frac{\Delta_x}{2N}.$$ (63) Using union bound one directly obtains: <span id="page-9-0"></span> $$\widetilde{T}_{k,n}^{1} \leq l_{0} + \sum_{t=l_{0}}^{n} \sum_{j \in Y_{x}} \mathbf{P} \left\{ \overline{X}_{j,t} + \sqrt{\frac{\ln_{+}(t^{2/3}/KO_{j,t})}{O_{j,t}}} \right\}$$ $$\geq \mu_{j} + \frac{\Delta_{x}}{2N} \right\}$$ (64) $$\leq l_{0} + \sum_{t=l_{0}}^{n} \sum_{j \in Y_{x}} \mathbf{P} \left\{ \overline{X}_{j,t} - \mu_{j} \right.$$ $$\geq \frac{\Delta_{x}}{2N} - \sqrt{\frac{\ln_{+}(t^{2/3}/KO_{j,t})}{O_{j,t}}} \right\}.$$ (65) Now we let $l_0 = 16N^2\lceil\ln(\frac{n^{3/4}}{K}\Delta_x^2)/\Delta_x^2)\rceil$ with $\lceil y \rceil$ the smallest integer larger than y. We further set $\delta_0 = e^{1/2}\sqrt{K/n^{2/3}}$ and set $x_0$ such that $\Delta x_0 \leq \delta_0 < \Delta_{x_0+1}$ . As $O_{j,t} \geq l_0$ , $$\ln_{+}\left(\frac{t^{3/4}}{KO_{j,t}}\right) \le \ln_{+}\left(\frac{n^{3/4}}{KO_{j,t}}\right) \le \ln_{+}(n^{3/4}/Kl_{0})$$ $$\le \ln_{+}\left(\frac{n^{3/4}}{K} \times \frac{\Delta_{x}^{2}}{16N^{2}}\right) \le \frac{l_{0}\Delta_{x}^{2}}{16N^{2}} \le \frac{O_{j,t}\Delta_{x}^{2}}{16N^{2}}.$$ (66) Hence we have. $$\frac{\Delta_x}{2N} - \sqrt{\frac{\ln_+(t^{3/4}/KO_{j,t})}{O_{j,t}}} \ge \frac{\Delta_x}{2N} - \frac{\Delta_x}{\sqrt{16N^2}} = c\Delta_x \qquad (67)$$ with $c = \frac{1}{2N} - \frac{1}{\sqrt{16N^2}} = \frac{1}{4N}$ . Therefor, using Hoeffding's inequality and Equation (65), and then plugging into the value of $l_0$ , we get, <span id="page-9-1"></span> $$\widetilde{T}_{k,n}^{1} \leq l_{0} + \sum_{t=l_{0}}^{n} \sum_{j \in Y_{x}} \mathbf{P} \left\{ \overline{X}_{j,t} - \mu_{j} \geq c \Delta_{x} \right\}$$ $$\leq l_{0} + \sum_{t=l_{0}}^{n} \sum_{j \in Y_{x}} \exp(-2O_{j,t}(c\Delta_{x})^{2})$$ $$\leq l_{0} + K \cdot n \cdot \exp(-2l_{0}(c\Delta_{x})^{2})$$ $$= 1 + 16N^{2} \frac{\ln(\frac{n^{3/4}}{K}\Delta_{x}^{2})}{\Delta_{x}^{2}} + K \cdot n \cdot \exp(-2\ln(n^{\frac{1}{12}}e)).$$ (68) As $\delta_0 = e^{1/2} \sqrt{K/n^{\frac{2}{3}}}$ and $\Delta_x > \delta_0$ , the second term in $$\frac{16N^2(1+\ln n^{1/12})}{Ke} \cdot n^{2/3} < \frac{16N^2(n^{2/3}+n^{3/4})}{Ke}$$ The last term of (68) is bounded by $$K \cdot n \cdot \exp(-2\ln(n^{\frac{1}{12}}e)) \le \frac{K}{e^2} \cdot n^{\frac{5}{6}}$$ Finally we get $$\widetilde{T}_{k,n}^{1} = 1 + \frac{16N^{2}(n^{2/3} + n^{3/4})}{Ke} + \frac{K}{e^{2}} \cdot n^{\frac{5}{6}}.$$ (69) # 3. Bounding $T_{k,r}^2$ <span id="page-9-3"></span> $$\widetilde{T}_{k,n}^{2} = \sum_{t=1}^{n} \mathbf{1}\{W_{1} \le W_{x,t}, W_{1} < Z_{x}\}$$ $$\le \sum_{t=1}^{n} \mathbf{P}\{W_{1} < Z_{x}\} \le n\mathbf{P}\{W_{1} < Z_{x}\}.$$ (70) Remember that at time slot z, we have $W_1 = \min W_{1,t}$ . For the probability $\{W_1 < Z_x\}$ of fixed x, we have <span id="page-9-2"></span> $$\mathbf{P}\{W_1 < \sigma_1 - \frac{\Delta_x}{2}\}\tag{71}$$ $$= \mathbf{P} \left\{ \sum_{j \in N_1, j=1}^{N} w_{j,z} < \sigma_1 - \frac{\Delta_x}{2} \right\}$$ (72) $$\leq \sum_{j \in N_1} \mathbf{P} \left\{ w_{j,z} < \mu_j - \frac{\Delta_x}{2N} \right\}. \tag{73}$$ We define function $f(u) = e \ln(\sqrt{\frac{n^{1/3}}{K}}u)/u^3$ for $u \in$ $[\delta_0, N]$ . Then we have, $$\mathbf{P}\left\{w_{j,z} < \mu_{j} - \frac{\Delta_{x}}{2N}\right\}$$ $$= \mathbf{P}\left\{\exists 1 \leq l \leq n : \sum_{\tau=1}^{l} \left(X_{j,\tau} + \sqrt{\frac{\ln_{+}(\frac{\tau^{2/3}}{Kl})}{l}}\right) < l\mu_{j} - \frac{l\Delta_{x}}{2N}\right\}$$ $$\leq \mathbf{P}\left\{\exists 1 \leq l \leq n : \sum_{\tau=1}^{l} (\mu_{j} - X_{j,\tau}) > \sqrt{l\ln_{+}(\frac{\tau^{2/3}}{Kl})} + \frac{l\Delta_{x}}{2N}\right\}$$ $$\leq \mathbf{P}\left\{\exists 1 \leq l \leq f(\Delta_{x}) : \sum_{\tau=1}^{l} (\mu_{j} - X_{j,\tau}) > \sqrt{l\ln_{+}(\frac{\tau^{2/3}}{Kl})}\right\}$$ $$+ \mathbf{P}\left\{\exists f(\Delta_{x}) < l \leq n : \sum_{\tau=1}^{l} (\mu_{j} - X_{j,\tau}) > \frac{l\Delta_{x}}{2N}\right\}. \tag{74}$$ For the first term we use a peeling argument with a geometric grid of the form $\frac{1}{2^{g+1}}f(\Delta_x) \leq l \leq \frac{1}{2^g}f(\Delta_x)$ : $$\mathbf{P} \bigg\{ \exists 1 \le l \le f(\Delta_{x}) : \sum_{\tau=1}^{l} (\mu_{j} - X_{j,\tau}) \\ > \sqrt{l \ln_{+}(\frac{\tau^{2/3}}{Kl})} \bigg\}$$ $$\le \sum_{g=0}^{\infty} \mathbf{P} \bigg\{ \exists \frac{1}{2^{g+1}} f(\Delta_{x}) \le l \le \frac{1}{2^{g}} f(\Delta_{x}) : \sum_{\tau=1}^{l} (\mu_{j} - X_{j,\tau}) \\ > \sqrt{\frac{f(\Delta_{x})}{2^{g+1}} \ln_{+}(\frac{\tau^{2/3} 2^{g}}{Kf(\Delta_{x})})} \bigg\}$$ $$\le \sum_{g=0}^{\infty} \exp \bigg( -2 \frac{f(\Delta_{x}) \frac{1}{2^{g+1}} \ln_{+}(\frac{\tau^{2/3} 2^{g}}{Kf(\Delta_{x})})}{f(\Delta_{x}) \frac{1}{2^{g}}} \bigg)$$ $$\le \sum_{s=0}^{\infty} \bigg[ \frac{Kf(\Delta_{x})}{n^{2/3}} \frac{1}{2^{g}} \bigg] \le \frac{2Kf(\Delta_{x})}{n^{2/3}} \tag{75}$$ where in the second inequality we use Lemma 2. As the special design of function f(u), we have f(u) takes maximum of $\frac{n^{1/2}}{3K^{3/2}}$ when $u=e^{1/3}\sqrt{K/n^{1/3}}$ . For $\Delta_x>e^{1/3}\sqrt{K/n^{1/3}}$ , we have $$\frac{2Kf(\Delta_x)}{n^{2/3}} \le \frac{2}{3\sqrt{K}}n^{-1/6}. (76)$$ For the second term we also use a peeling argument but with a geometric grid of the form $2^g f(\Delta_x) \leq l < 2^{g+1} f(\Delta_x)$ : <span id="page-10-0"></span> $$\mathbf{P}\left\{\exists f(\Delta_x) < l \le n : \sum_{\tau=1}^{l} (\mu_j - X_{j,\tau}) > \frac{l\Delta_x}{2N}\right\}$$ $$\le \sum_{g=0}^{\infty} \mathbf{P}\left\{\exists 2^g f(\Delta_x) \le l \le 2^{g+1} f(\Delta_x) : \sum_{\tau=1}^{l} (\mu_j - X_{j,\tau})\right\}$$ $$> \frac{2^{g-1} f(\Delta_x) \Delta_x}{N}\right\}$$ $$\le \sum_{g=0}^{\infty} \exp\left(\frac{-2^g f(\Delta_x) \Delta_x^2}{4N^2}\right)$$ $$\le \sum_{g=0}^{\infty} \exp\left(-(g+1) f(\Delta_x) \Delta_x^2/4N^2\right)$$ $$= \frac{1}{\exp(f(\Delta_x) \Delta_x^2/4N^2) - 1}.$$ (77) We note that $f(u)u^2$ has a minimum of $\frac{e}{\sqrt{K}}n^{1/6}$ when $u=x_0$ . Thus for (77), we further have, $$\frac{1}{\exp(\frac{f(\Delta_x)\Delta_x^2}{4N^2}) - 1} \le \frac{1}{\exp(\frac{en^{1/6}}{4\sqrt{K}N^2}) - 1} \le \frac{4\sqrt{K}N^2n^{-\frac{1}{6}}}{e}.$$ (78) Combining (73) and (70), we then have $$\widetilde{T}_{k,n}^2 \le \frac{2Nn^{5/6}}{3\sqrt{K}} + \frac{4\sqrt{K}N^3n^{5/6}}{e} \le (1 + \frac{4\sqrt{K}N^2}{e})Nn^{\frac{5}{6}}.$$ (79) #### 4. Results without dependency on $\Delta_{\min}$ Summing $\widetilde{T}_{k,n}^1$ and $\widetilde{T}_{k,n}^2$ , we have $$\widetilde{T}_{x,n} \leq \widetilde{T}_{k,n}^{1} + \widetilde{T}_{k,n}^{2}$$ $$= 1 + \frac{16N^{2}}{Ke} (1 + \frac{8N}{15}) n^{\frac{2}{3}} + (1 + \frac{4\sqrt{K}N^{2}}{e}) N n^{\frac{5}{6}}$$ and using $\Delta_{X} \leq N$ and $\Delta_{x} \leq \delta_{0}$ for $x \leq x_{0}$ , we have $$\Re(n) \leq \sqrt{Ken^{\frac{2}{3}}} + NK \left[1 + \frac{16N^{2}}{e} (1 + \frac{8N}{e})n^{\frac{2}{3}} \right]$$ $$\begin{split} \Re(n) & \leq \sqrt{Ke} n^{\frac{2}{3}} + NK \left[ 1 + \frac{16N^2}{Ke} (1 + \frac{8N}{15}) n^{\frac{2}{3}} \right. \\ & + \left( 1 + \frac{4\sqrt{K}N^2}{e} \right) Nn^{\frac{5}{6}} \right] \\ & \leq NK + \left( \sqrt{eK} + 8(1+N)N^3 \right) n^{\frac{2}{3}} \\ & + \left( 1 + \frac{4\sqrt{K}N^2}{e} \right) N^2 Kn^{\frac{5}{6}}. \end{split}$$