Prophetnet Ads A Looking Ahead Strategy For Generative Retrieval Models In Sponsored Search Engine

Abstract.

Section titled “Abstract.”In a sponsored search engine, generative retrieval models are recently proposed to mine relevant advertisement keywords for users’ input queries. Generative retrieval models generate outputs token by token on a path of the target library prefix tree (Trie), which guarantees all of the generated outputs are legal and covered by the target library. In actual use, we found several typical problems caused by Trieconstrained searching length. In this paper, we analyze these problems and propose a looking ahead strategy for generative retrieval models named ProphetNet-Ads. ProphetNet-Ads improves the retrieval ability by directly optimizing the Trie-constrained searching space. We build a dataset from a real-word sponsored search engine and carry out experiments to analyze different generative retrieval models. Compared with Trie-based LSTM generative retrieval model proposed recently, our single model result and integrated result improve the recall by 15.58% and 18.8% respectively with beam size 5. Case studies further demonstrate how these problems are alleviated by ProphetNet-Ads clearly.

Keywords: Sponsored Search Engine · Generative Retrieval Model · Keywords Extension · Information Retrieval · Natural Language Generation

1 Introduction

Section titled “1 Introduction”In a sponsored search engine, search queries from the user are expanded to appropriate advertisements (Ads) keywords. Advertisers bid on triggered keywords to display their ads and pay by click. The primary income for a sponsored search engine is to provide ads that users potentially need. Therefore the applications

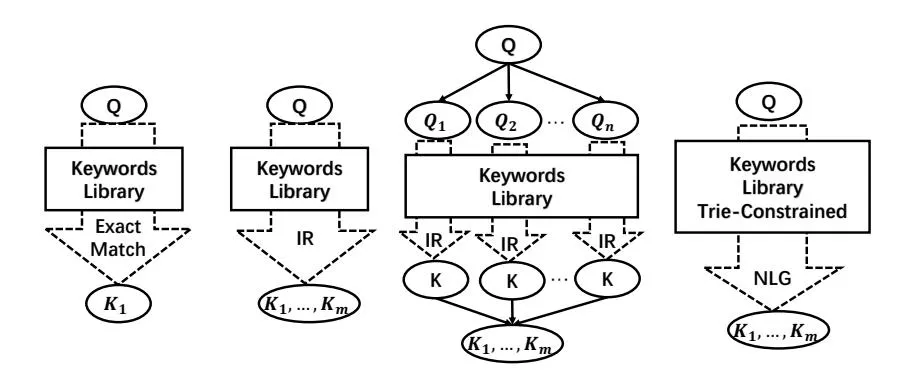

of keywords extension from queries to relevant keywords in the ads library are deeply concerned. At the beginning, search engines trigger ads when the queries are identical with an ads keyword. Then, methods like Information retrieval (IR) with quality filter [4] are commonly used to recall more relevant keywords. However, traditional IR techniques are unable to fill the semantic gap between queries and ads keywords. Thus sponsored search engines pay much attention on how to excavate more semantic-related keywords. A solution is to re-write the initial user queries to a range of intermediate queries and then combine all the outcomes retrieved from them, such as [5] from Yahoo, [11] from Google, and [1] from Microsoft. Re-writing strategies are widely used because directly extending queries to keywords will lead to the low-efficiency problem: very few extensions are included in the keywords library. Recently [9] used Trie-based LSTM model to address this problem by constraining the generation searching space. Trie means a prefix tree. Trie-based NLG models generate tokens on paths of a Trie to make sure outputs are covered by the keywords library.The models used for keywords extension task in different stages are shown in Figure1.

Fig. 1. The models used for keywords extension task. Firstly, triggered ads keywords are the exact match of users’ query. Secondly, information retrieval techniques are used to mine similar ads keywords. Thirdly, users’ queries are re-written into intermediate queries to do IR, which alleviate the semantic gap between queries and ads keywords. Recently, generative retrieval models are used for keywords extension task, which ensures all the generated keywords all covered by the target library because of the constrained searching space.

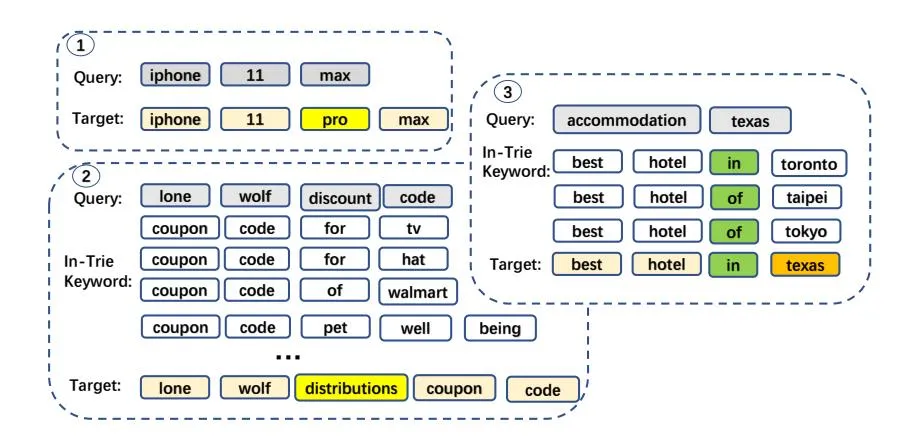

However, simply adding a Trie constraint to a natural language generation (NLG) model is not enough, and we found several common problems in daily use. The biggest problem of Trie-based generative retrieval model is that it cannot utilize global information. We list three examples in Figure 2. The first problem is that noise tokens will have a very low generation score thus lead to a wrong searching path. A second common problem is called “common prefix has no target object in the future tokens”, which implies that the entire beam search space is filled with common prefixes. Although these prefixes may compose good keywords, sometimes expected suffixes are not in the Trie to compose desired keywords. We cannot simply throw these sequences from beam search unfinished queue as these prefixes are really “common” and take a portion of good results. The last problem is that models are hard to decide which one is better when several tokens have similar high generation scores. For keywords extension, models have no idea which suffix will lead to desired keywords in a Trie.

Fig. 2. In example 1, “pro” is a noise word with low generation score if NLG model is not trained on this data. In example 2, generative retrieval models will be easily trapped into the common prefix “coupon code xxx”. In example 3, both “in” and “of” have high generation scores but “of” has no desired suffix “texas’.

Inspired by ProphetNet[15], which is able to predict next several tokens simultaneously, we propose ProphetNet-Ads to alleviate above problems with the future information. ProphetNet is proposed as a new pre-training architecture to predict next n-grams. ProphetNet-Ads employs the future tokens’ scores to look ahead several steps in the Trie, which directly optimizes the searching space. With Trie-based beam search, the next token to generate is constrained to possible suffixes of the decoded hypothesis according to the Trie. ProphetNet-Ads is proposed for better selection of the suffixes. ProphetNet-Ads modifies the predicting tokens’ scores as a weighted sum of its generation score and future information scores to optimize the searching space. We rank the decoding hypothesis with the modified scores, but store the unchanged sentence scores, which optimizes searching space and meantime keeps the scores consistent to original NLG model. The experimental results show that our proposed strategies recall more relevant keywords with an obvious improvement. Case studies further demonstrate how ProphetNet-Ads alleviates these typical problems.

2 Background

Section titled “2 Background”ProphetNet

Section titled “ProphetNet”ProphetNet[15] is recently proposed as a new pretraining NLG architecture. To alleviate strong local correlations such as bi-gram combination and enhance the hidden states to contain more global information, next n-grams are trained to predict. ProphetNet employs n-stream self-attention to support next n-grams from any starting positions in a given output are trained to predict simultaneously. Although next n-grams are explicitly used in the training procedure, only the next first token is predicted in the inference procedure like traditional NLG models. These future tokens’ scores can be used to point out whether the next first token has desired information in a Trie.

Trie-based NLG

Section titled “Trie-based NLG”A Trie is a prefix tree, and a path from the starting token to an internal node denotes a prefix of a sequence, a path from the starting token to a leaf node denotes a complete sequence. Suppose the already decoded token sequence is a prefix of a legal keyword sequence, then it must be a route in Trie, and we generate next tokens from the suffix nodes of this route. In this manner, all of the generated outputs are in-library. Trie-based inference have been successfully used in NLG tasks in recent years [6,16,7,9]. [6] firstly used Trie to constrain the model output candidates for email replying task. It can also been seen as picking responses from already given sentences in a Trie for any given email.

Keywords Extension for Sponsored Search Engine

Section titled “Keywords Extension for Sponsored Search Engine”Sponsored search engine service providers are deeply concerned with the task of extending users’ input queries into ads keywords. Researches are carried out to fill the semantic gap between queries and ads keywords. One solution is to re-write the initial user queries to intermediate queries to retrieve keywords, such as [1,5,11]. With the improvement of NLG techniques, [3] used LSTM to train the re-writing model, utilizing the deep learning network for better semantic modeling ability. [8] from Microsoft directly trained a NLG model to generate candidate ads keywords. Even though the NLG model’s outputs are highly qualified, however, they have a high likelihood to be out of the target set. Recently [9] used Trie-based NLG model to overcome the low-efficiency barrier by restricting the search space, and this methodology brought a considerable enhancement for their system with an additional 10% revenue each year.

3 ProphetNet-Ads

Section titled “3 ProphetNet-Ads”Based on ProphetNet which is able to predict more future tokens, we propose an explicit looking ahead strategy named ProphetNet-Ads as a possible solution for problems discussed in the introduction. ProphetNet-Ads modifies the scores of the next first predicting tokens by looking ahead future tokens’ scores and directly optimizes the searching space. Figure3 shows an illustration of ProphetNet-Ads generation procedure.

ProphetNet-Ads modifies the in-Trie suffix tokens’ scores with the information of its future tokens when beam searching on a Trie. We look ahead ` steps, where is usually n-1 for a ProphetNet n-gram generation model, since we can generate n tokens simultaneously, the next first predicting token, and n-1 future tokens to look ahead for this suffix. A residual weight is set to control the weight of next token’s generation score and its looking ahead score.

Fig. 3. An example of Bi-gram ProphetNet-Ads. When generating next token for “the best hotel”, “in” and “of” are its suffix tokens according to the Trie. Though both of them are good suffixes to generate, “of” has no future tokens with high score, while future tokens of “in” cover desired token “texas”. Thus “in” is generated.

As shown in Figure 3, a Bi-gram ProphetNet is able to generate next two tokens’ generation scores at each time step, and we can call them . We refer the previous decoded sequence as seq, and next first suffixes of seq as s1. For each node in s1, one step further suffixes of are noted as s2. The generation score of next first token is modified as:

(1)

For example, the step scores for the suffixes we are predicting from Figure 3 are modified as:

(2)

Similarly, a n-gram generation model could output the probability distributions of next n tokens as . We use a recursive function to modify their scores from the furthest to the nearest next first tokens’ scores. Scores of are modified with their highest children nodes’ scores in , and then be used

Algorithm 1: N-gram ProphetNet-Ads Trie-based Searching

input : Beam Size b, n-gram ProphetNet P, Trie T, Residual weight λ, Input query X, max output token length loutput: Keywords extensions πalive buffer: H ← ∅ ; finished buffer: π ← ∅ ; // with [hypothesis, scores]put [bos, score bos] in H ; // Initialize the alive bufferwhile best alive score ≥ worst alive score and decoded length < l do Osen ← ∅ ; // Original sentence scores to be stored in H Msen ← ∅ ; // Modified sentence scores to be ranked temporarily for seq in H do [g1,g2,...,gn] ← P(seq, X) ; // Next future n tokens' scores s1, m1 ← T(seq) ; // s1: suffix tokens, m1: mask vector Otoken = Mtoken = g1 + m1 ; // Mask the tokens out of Trie for ρ1 in s1 ; // Start looking ahead do s2, m2 ← T(seq + ρ1); for ρ2 in s2 ; // Could be replaced with recursive function do s3, m3 ← T(seq + ρ1 + ρ2) ; for ρ... in s... do ...; for ρn−1 in sn−1 do // Modify scores from the farthest nodes sn, mn ← T(seq + ρ1 + ρ2 + ... + ρn−1); gn−1[ρn−1] = λ × gn−1[ρn−1] + (1 − λ) × max(gn + mn) ; end ...; end g2[ρ2] = λ × g2[ρ2] + (1 − λ) × max(g3 + m3); end // Modify scores until the next first token Mtoken[ρ1] = λ × Otoken[ρ1] + (1 − λ) × (max(g2 + m2)); end // Calculate new sentence scores with previous decoded score and next first tokens' step score O ← func(seq.score, Otoken) put O into Osen ; // Original scores M ← func(seq.score,Mtoken) put M into Msen // Modified scores end // Rank with modified scores but store their original scores new seqs, id ← top b of(Msen) ; new f inished seqs, id f ← top b of(π.socres,Msen.eos) ; H ← new seqs, Osen[id] ; π ← new f inished seqs, Osen[id f];endreturn π;to modify gn−2, until next first tokens’ scores g1 are modified. Then, the best token in g1 is chosen. Considering a high-confidence suffix before explicit looking ahead strategy, if it has no good tokens steps further, a low future score will be passed backward. On the opposite if there are any noise tokens in suffix but with expected tokens in the future, further high-confidence scores will also be passed across the noise to give a bonus for the token we are predicting.

However, if we directly use the modified generation tokens’ score g1 to calculate decoded sequence scores in beam search, results are inconsistent with the generation model as it modifies the output sequences scores, which could bring error accumulation. Thus, we only use the modified scores to rank and pick the best sequences, but store their original scores. ProphetNet-Ads not only optimizes the searching space but also keeps the scores consistent to the generation model. The algorithm of ProphetNet-Ads is described in Algorithm1.

4 Experiment

Section titled “4 Experiment”In this section, we introduce the dataset and implementations of models to validate ProphetNet-Ads. Since ProphetNet only releases its Bi-gram uncased pretrainied checkpoint5 for now, for fair comparison, in this paper the Uni-gram to Tri-gram ProphetNet or ProphetNet-Ads are finetuned in ProphetNet architecture but without pretraining.

4.1 Dataset

Section titled “4.1 Dataset”The keywords extension dataset is collected from Bing search engine keywords extension library, formed as “query, triggered ads keyword” pairs. They are collected from advertisers, human labelling, searching log history, high quality extensions from old algorithms, etc. 260 million keywords are used to build a Trie as the searching space. After a quality model and Trie-filtering, we randomly select one million high-qualified training data and ten thousand testing data. The average length for target keywords after WordPiece tokenization is 6.69 and the average length for training data query input is 4.47. Each query from the testing data has at least one associated ads keyword, but we are unsure of how many other related keywords it has in the Trie. In actual use for a sponsored search engine, a number of relevant keywords are generated for a given query for further filtering and subsequent processing. More relevant keywords are recalled is concerned. Under this setting, we use recall rate to compare different models. MAP(mean average precision) is also included for comparison in the main results Table 1.

4.2 Model Settings

Section titled “4.2 Model Settings”We implement both traditional IR algorithm BM25 and a list of generative retrieval models as our baseline. Okapi BM25 [12] is a traditional IR strategy, with

5 https://github.com/microsoft/ProphetNet

the word tokenization of nltk[10] and parameters as k1 = 1.2, b = 0.75, = 0.25. Second type baseline is Trie based LSTM models as proposed by [9]. A 4-layer encoder, 4-layer decoder uni-directional LSTM+Trie model is implemented according to the complex model for offline use of [9]. Improvements are added based on it. We change the uni-directional LSTM encoder to bi-directional LSTM encoder to validate the effects of encoding bi-directional information. Copy mechanism[2,13] gives a bonus to generation scores of those words appear in the input sequence. Output keywords often have some overlap with the input queries, and copy mechanism allows model to directly pick some tokens from the input to compose the answer, which improves the generation ability for overlapped tokens. We train ProphetNet large [15] models with copy mechanism as the third baselines. ProphetNet-Ads shares the same checkpoint as ProphetNet baselines, with additional proposed optimization by looking ahead.

All generative retrieval models use a same 30,000 words vocabulary with WordPiece [14] tokenization and share the same Trie. The LSTM based models are implemented according to [13], and trained for 10 epochs. ProphetNet and ProphetNet-Ads are implemented according to [15], trained with learning rate 3e-4, 5 epochs. Other hyper-parameters are same to the referenced models. Training batch sizes are all set to 36, with a maximum input token length of 20 and a maximum output length of 20.

4.3 Results Analyze

Section titled “4.3 Results Analyze”| Model | R@5 R@10 R@15 R@20 MAP@5 MAP@10 MAP@15 MAP@20 | |||||

|---|---|---|---|---|---|---|

| BM25 | 27.86 33.40 37.30 39.13 | 0.2051 | 0.2125 | 0.2156 | 0.2166 | |

| LSTM | 62.47 71.81 75.63 77.76 | 0.5716 | 0.6267 | 0.6442 | 0.6534 | |

| Bi-LSTM | 63.28 72.28 76.21 78.13 | 0.5770 | 0.6292 | 0.6479 | 0.6563 | |

| Bi-LSTM+Copy | 67.37 76.12 79.40 83.37 | 0.6114 | 0.6616 | 0.6755 | 0.6811 | |

| Uni-gram ProphetNet 75.00 82.50 84.90 86.50 | 0.6929 | 0.7362 | 0.7461 | 0.7526 | ||

| Tri-gram ProphetNet 75.48 83.08 85.45 86.68 | 0.6974 | 0.7426 | 0.7518 | 0.7565 | ||

| ProphetNet-Ads | 78.05 84.28 86.24 87.54 | 0.7133 | 0.7472 | 0.7542 | 0.7580 | |

| Merged Tri+Tri-Ads | 81.34 86.83 88.45 89.39 | / | / | / | / | |

| Merged Above | 86.56 90.11 91.34 92.15 | / | / | / | / |

Table 1. Comparison with traditional IR algorithm BM25 and generative retrieval models. Results include recently proposed Trie-based LSTM model and its enhanced variants, ProphetNet generative retrieval model and ProphetNet-Ads. ProphetNet-Ads uses same checkpoint as Tri-gram ProphetNet, with looking ahead optimization. Merged Tri+Tri-Ads means the results merged with Tri-gram ProphetNet and ProphetNet-Ads. R@x for generation model means recall of generation procedure with beam size x, for BM25 means recall of top x of the IR results.

We analyze different keywords extension models according to the results in Table 1. Firstly, we can easily draw the idea that traditional IR algorithm like BM25 is not suitable for keywords extension task, since it cannot fill the semantic gap. Compared with LSTM with the beam size 5, replacing encoder with bidirectional LSTM could improve the recall by 0.81% and adding copy mechanism could improve the recall by 4.09% further. Copy mechanism enhances the results obviously, because the keywords are likely to cover some same words as the input query, copy mechanism enables model to directly fetch some words or word pieces from the input, which is a strong assistant to our model. Compared to the LSTM variants, Uni-gram ProphetNet which is similar to Transformer, improves recall by 7.63%. This is mainly because the stacked Transformer architecture are deeper and keywords extension task has a big training corpus, with a large amount of features and information for the generation model to capture and learn. Tri-gram ProphetNet improves the recall by 0.48%, which shows that trained to predict more future tokens helps NLG ability even the future tokens are not explicitly used. ProphetNet-Ads uses the same trained model as Tri-gram ProphetNet, and improves the recall by 2.57% further. This shows that optimizing searching space in the inference procedure could help a generative retrieval model a lot, and our proposed looking ahead strategy can optimize it effectively by incorporating future information. Merged result is more concerned by sponsored search engine for offline use. From the merged results we observe that, with the same one million training data, integrating different searching space optimization models can generate more satisfactory results.

With the comparison between our models and the baseline models, we see that our proposed looking ahead strategies improve the results obviously. It shows that simply using Trie to constrain the searching space is not enough, and our looking ahead strategies can optimize the searching space and help the keywords extension task effectively.

4.4 Ablation Analyze

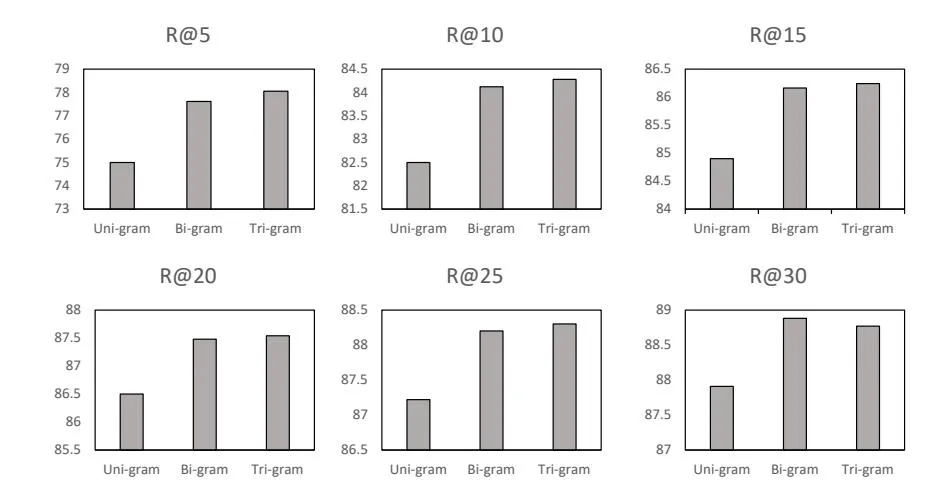

Section titled “4.4 Ablation Analyze”In this part, we will analyze the choice of how many tokens to predict as the n for n-gram ProphetNet-Ads and the choice of residual weight λ.

Firstly, we discuss the choice of n with Figure 4. Compared with the Uni-gram model, we obverse that looking ahead one future token significantly improves the results and the benefit of looking further is limited. It is due to the short length of target keywords. Most of the problems could be alleviated even with one token to look ahead. We can also see in the case study section4.5 that one length noise token is common for keywords extension. Thus we do not carry experiments for n ≥ 4.

Secondly, we discuss options for the residual weight of λ. We conduct results for a Bi-gram model with λ equals 0.4, 0.6, 0.8. Results can be seen from Table 2. We observe that using λ = 0.6 or λ = 0.8 reaches comparable results. This result is reasonable. Firstly, λ = 0.6 or 0.8 reaches the balance between maintaining sufficient representation for the decoding token and using future information to assist. Further, no matter what value λ is, it is used to modify the ranking score rather than real sentence score, thus as long as one sequence is put into the alive buffer, the same NLG model-consistent sentence score is recorded. Thus

Fig. 4. Results of different grams to predict. Improvement is significant by looking ahead one token, but benefit is limited by looking ahead more.

our strategy is robust to the choice of hyper-parameter λ.In other chapters of the paper, explicit n-gram strategies uses λ as 0.8.

| λ | R@5 R@10 R@15 R@20 R@25 R@30 | |||||

|---|---|---|---|---|---|---|

| 0.4 76.31 | 82.03 | 84.23 | 85.54 | 86.22 | 86.89 | |

| 0.6 78.13 | 84.09 | 86.07 | 87.23 | 87.89 | 88.65 | |

| 0.8 77.54 | 84.14 | 86.15 | 87.44 | 88.17 | 88.88 |

Table 2. Results for different residual weight λ for a Bi-gram model.

4.5 Case Study

Section titled “4.5 Case Study”In this section, we discuss on how ProphetNet-Ads helps to solve the problems in the generative retrieval model with actual cases. We list three examples that the best baseline model, Tri-gram ProphetNet, failed to find golden ads keywords with the beam size 30 and our model could successfully generate with the beam size 5.

In the first case of “lone wolf discount code”, baseline model fails on generating the desired keyword with the prefix “lone wolf distributors”. “distributors” in this case is a noise token for NLG model and baseline model fails to skip the noise. Meanwhile, baseline model search space is filled with the common prefix “coupon code” and finally ending in a range of low-scored outputs because “coupond code” does not have “lone wolf” related suffixes. Baseline model will never achieve “lone wolf discount” with an increasing beam size in this scenario

| input: | Baseline: |

|---|---|

| lone wolf discount code | lone wolf coupon code |

| golden: | lone wolfs |

| lone wolf distributors coupon code | coupon code discount |

| Ours: | lone wolf car rentals |

| lone wolf coupon code | coupon code coupon code |

| lone wolf distributors coupon code | coupon code contact |

| lone wolf distributors discount code | |

| lone wolf distributors promotional code | coupon code pet well being |

| lone wolf distributors promotional codes coupon code athleta yoga | |

| input: | Baseline: |

| kalathil resort | kalathil resort |

| golden: | kalamata resort |

| kalathil lake resort | kalahari hotel |

| Ours: | resort kalahari |

| kalathil resort | khao resort |

| kalathil lake resort | koh samui resorts |

| kalathi lake resorts | |

| kalathil lake | khao lak resort khao lak hotel |

| kalathil lake resort india | koh samui all inclusive holiday |

| input: | Baseline: |

| workmans car insurance | workmans auto insurance quote |

| golden: | worx products |

| workmen auto insurance | walmart car insurance rates |

| Ours: | workman islington |

| workmen auto insurance | walmart auto insurance quote |

| workmens auto car insurance | walmart auto insurance toronto |

| car insurance man | |

| car insurance driver women | worxs website call |

| workmans auto insurance quote | worx warranty registration usa |

Table 3. Extensions of queries from ProphetNet-Ads and baseline model.

unless we cut Trie’s “coupon code” brunch and foresee that “lone wolf distributors” prefix will contain correct information in future tokens. Looking ahead strategies assist in avoiding a optimal local trap in a generative retrieval model, skipping the noise token “distributors”, and finally generates all five extensions reasonable.

In the second case of keywords extensions of “kalathil resort”, we can see that “kalathil” actually means “kalathil lake” in India. However, “kalathil” is a lake which is an unknown information for a generative retrieval model. Baseline method generates a lot of extensions resembling the input query, but most of them are wrong. Our model implicitly knows the combination of “kalathil lake”, by looking ahead. Looking ahead strategies allow generative retrieval model to find a proper path with golden target information to go.

In the last case of keywords extensions of “workmans car insurance”, two difficulties are there for generative retrieval models: “workmen” is misspelled as “workmans” and synonym words “car” and “auto” used in the query and outputs. Both of the models are powerful enough to learn that “car” and “auto” are synonymous, but baseline model fails in generating “workmen”. It is because no sufficient data about misspelled “workmans” and correct “workmen” are provided in the training corpus. But our model successfully generate it by looking ahead future information. Other extensions are also more reasonable than baseline ones, with diverse prefix “workmen”, “workmans” and “car insurance”, which also show the strong retrieval ability of our model.

5 Conclusion

Section titled “5 Conclusion”In this work, we investigate the weakness of present generative retrieval models and propose ProphetNet-Ads to improve the retrieval ability. For the experiments, we collect a keywords extension dataset from a real-world search engine. We carry experiments on the recently proposed Trie-based LSTM generation model and other variants of generative retrieval models to analyze generative retrieval models in keywords extension task. Experimental results show that ProphetNet-Ads brings significant improvement over the recall and MAP metrics.

References

Section titled “References”-

1. Gao, J., He, X., Xie, S., Ali, A.: Learning lexicon models from search logs for query expansion. In: Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning. pp. 666–676. Association for Computational Linguistics (2012)

-

2. Gu, J., Lu, Z., Li, H., Li, V.O.: Incorporating copying mechanism in sequence-tosequence learning. arXiv preprint arXiv:1603.06393 (2016)

-

3. He, Y., Tang, J., Ouyang, H., Kang, C., Yin, D., Chang, Y.: Learning to rewrite queries. In: Proceedings of the 25th ACM International on Conference on Information and Knowledge Management. pp. 1443–1452. ACM (2016)

-

4. Hillard, D., Schroedl, S., Manavoglu, E., Raghavan, H., Leggetter, C.: Improving ad relevance in sponsored search. In: Proceedings of the third ACM international conference on Web search and data mining. pp. 361–370. ACM (2010)

-

5. Jones, R., Rey, B., Madani, O., Greiner, W.: Generating query substitutions. In: Proceedings of the 15th international conference on World Wide Web. pp. 387–396. ACM (2006)

-

6. Kannan, A., Kurach, K., Ravi, S., Kaufmann, T., Tomkins, A., Miklos, B., Corrado, G., Lukacs, L., Ganea, M., Young, P., et al.: Smart reply: Automated response suggestion for email. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. pp. 955–964. ACM (2016)

-

7. Laddha, A., Hanoosh, M., Mukherjee, D.: Understanding chat messages for sticker recommendation in hike messenger. arXiv preprint arXiv:1902.02704 (2019)

-

8. Lee, M.C., Gao, B., Zhang, R.: Rare query expansion through generative adversarial networks in search advertising. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. pp. 500–508. ACM (2018)

-

9. Lian, Y., Chen, Z., Hu, J., Zhang, K., Yan, C., Tong, M., Han, W., Guan, H., Li, Y., Cao, Y., et al.: An end-to-end generative retrieval method for sponsored search engine–decoding efficiently into a closed target domain. arXiv preprint arXiv:1902.00592 (2019)

-

10. Loper, E., Bird, S.: Nltk: the natural language toolkit. arXiv preprint cs/0205028 (2002)

-

11. Riezler, S., Liu, Y.: Query rewriting using monolingual statistical machine translation. Computational Linguistics 36(3), 569–582 (2010)

-

12. Robertson, S.E., Walker, S., Jones, S., Hancock-Beaulieu, M.M., Gatford, M., et al.: Okapi at trec-3. Nist Special Publication Sp 109, 109 (1995)

-

13. See, A., Liu, P.J., Manning, C.D.: Get to the point: Summarization with pointergenerator networks. arXiv preprint arXiv:1704.04368 (2017)

-

14. Wu, Y., Schuster, M., Chen, Z., Le, Q.V., Norouzi, M., Macherey, W., Krikun, M., Cao, Y., Gao, Q., Macherey, K., et al.: Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144 (2016)

-

15. Yan, Y., Qi, W., Gong, Y., Liu, D., Duan, N., Chen, J., Zhang, R., Zhou, M.: Prophetnet: Predicting future n-gram for sequence-to-sequence pre-training. arXiv preprint arXiv:2001.04063 (2020)

-

16. Ye, N., Fuxman, A., Ramavajjala, V., Nazarov, S., McGregor, J.P., Ravi, S.: Photoreply: Automatically suggesting conversational responses to photos. In: Proceedings of the 2018 World Wide Web Conference. pp. 1893–1899. International World Wide Web Conferences Steering Committee (2018)